Short answer: No software can guarantee recovery of a specific file.

Practical answer: you can verify your chances in advance—for free—by scanning with Disk Drill Basic and using the built-in Preview. If the exact file opens in Preview, you can upgrade with confidence and complete the recovery.

TL;DR

- ✋🏻 No guarantees: once data is overwritten or trimmed, it’s gone.

- 🔎 Verify first: run Disk Drill Basic, scan, and Preview the exact file. Successful Preview is the green light to upgrade and recover.

- 🗃️ Your odds depend on: storage type (HDD vs SSD/TRIM), file system, time since loss, post-loss activity, file size/fragmentation, encryption, and device health.

- 🤩 Best practice: stop using the device; if possible, make a byte-to-byte backup and scan the image, not the failing drive.

Why “Guarantee” Isn’t Possible (and How You Can Still Get Certainty)

Disk Drill can only read what still exists on the medium. If the blocks that used to hold your file have been overwritten (e.g., by a full format on modern Windows) or the SSD has purged them via the TRIM command, those bytes are gone, either physically or at the firmware level. No consumer or professional software can reconstruct them.

That’s why Disk Drill focuses on verifying recoverability before you pay:

- Disk Drill Basic uses the same scanners as the paid version.

- You can preview found files to confirm the content is intact—not just the filename.

How Disk Drill actually finds your file

Disk Drill uses multiple scanners optimized for different scenarios:

1. Quick Scan (Metadata-First)

Follows file system records (MFT on NTFS, catalogs on HFS+, directory structures on ext4/APFS/Btrfs) to pull files—names, paths, timestamps, and all—as long as the metadata still points to valid clusters/blocks. It’s ideal for recent deletions where metadata hasn’t been reused.

2. Deep Scan (File System Carving)

Also called metadata carving or structure-aware carving, this mode dives below the normal directory view and parses orphaned or partially damaged file-system structures directly from raw space. Instead of trusting the live directory tree, Disk Drill hunts for the file system’s building blocks and reconstructs what it can.

3. Deep Scan (Signature Carving)

When both the directory tree and most metadata are gone, Disk Drill switches to content-aware recovery. It scans raw bytes for file signatures (headers/footers/structures) and carves out files by format (JPEG/PNG, DOCX/PDF, MOV/MP4, ZIP, SQLite, etc.). This can resurrect content after reformats or severe corruption, but you typically lose original names and paths, and highly fragmented large files may come back only partially if some fragments are missing.
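To make the idea of signature carving concrete, here is a minimal sketch in Python. It is not Disk Drill's implementation; the function name `carve_jpegs` and the size cap are assumptions chosen for illustration. It handles only contiguous JPEG data, which is exactly why fragmented files tend to come back partial.

```python
from typing import List, Tuple

JPEG_HEADER = b"\xff\xd8\xff"  # start-of-image marker plus first byte of the next marker
JPEG_FOOTER = b"\xff\xd9"      # end-of-image marker


def carve_jpegs(raw: bytes, max_size: int = 50 * 1024 * 1024) -> List[Tuple[int, int]]:
    """Return (start, end) byte ranges that look like contiguous JPEG files.

    Hypothetical helper: it pairs each header with the next footer found
    within max_size bytes and assumes the bytes in between belong together.
    """
    found = []
    pos = 0
    while True:
        start = raw.find(JPEG_HEADER, pos)
        if start == -1:
            break
        end = raw.find(JPEG_FOOTER, start + len(JPEG_HEADER), start + max_size)
        if end == -1:
            # Header without a footer in range: skip it and keep scanning.
            pos = start + 1
            continue
        end += len(JPEG_FOOTER)
        found.append((start, end))
        pos = end
    return found


# Synthetic "raw space": zeros, one tiny fake JPEG, more zeros.
sample = b"\x00" * 100 + b"\xff\xd8\xff\xe0" + b"A" * 20 + b"\xff\xd9" + b"\x00" * 50
ranges = carve_jpegs(sample)
```

Note that the carved range carries no filename or path: the bytes themselves are the only evidence. Real carvers also validate internal structure, since a footer marker can appear inside an embedded thumbnail.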

4. Advanced Camera Recovery (ACR)

Purpose-built for cameras’ memory cards, ACR reconstructs fragmented recordings (think long 4K clips from GoPro, DJI, Sony, Canon, etc.) by analyzing container structure and camera-specific layout patterns—so it can reassemble MP4/MOV (and related assets) even when file-system metadata is missing or corrupted. Introduced in Disk Drill 6, it targets the exact fragmentation behaviors typical of action cams, drones, and dashcams. ACR runs a multi-stage pipeline—identifying initial fragments, confirming camera/cluster parameters, generating base video files, then rebuilding low-res proxies (LRV/LRF) before final high-resolution video reconstruction. This staged approach helps order fragments correctly and validate results as it goes.

5. Lost Partition Search

When the partition map itself is damaged or replaced (e.g., accidental “Initialize disk,” corrupted MBR/GPT, resized/deleted volumes, or a quick reformat), Disk Drill’s Lost Partition Search sweeps the entire device for volume and file-system signatures—backup GPT headers, MBR traces, NTFS boot sectors, exFAT boot/FSInfo blocks, HFS+/APFS headers, and ext superblocks (including secondary copies). It cross-checks offsets, sizes, and checksums to reconstruct plausible partitions, then exposes each as a virtual found volume so you can run Quick or Deep scans against it as if the partition still existed. This is especially effective after partition-table damage or accidental deletion; however, if the area was overwritten, TRIMed (on SSDs), or encrypted (BitLocker/FileVault) without the proper keys, only limited signature carving may be possible.
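The sweep for volume signatures can be pictured with a small Python sketch. It is a simplified illustration under stated assumptions, not Disk Drill's code: the helper name `find_boot_sectors` is made up, it checks only the NTFS and exFAT OEM identifiers plus the 0x55AA boot signature, and it skips the offset, size, and checksum cross-checks a real tool would perform.

```python
from typing import List, Tuple

SECTOR = 512


def find_boot_sectors(image: bytes, sector_size: int = SECTOR) -> List[Tuple[int, str]]:
    """Scan an image sector by sector for NTFS/exFAT boot sector signatures."""
    hits = []
    for offset in range(0, len(image) - sector_size + 1, sector_size):
        sector = image[offset:offset + sector_size]
        # Both formats end the first 512 bytes of the boot sector with 0x55 0xAA.
        if sector[510:512] != b"\x55\xaa":
            continue
        oem = sector[3:11]  # 8-byte OEM / file system name field
        if oem == b"NTFS    ":
            hits.append((offset, "NTFS"))
        elif oem == b"EXFAT   ":
            hits.append((offset, "exFAT"))
    return hits


# Synthetic disk: two empty sectors, a fake NTFS boot sector, one empty sector.
boot = bytearray(SECTOR)
boot[3:11] = b"NTFS    "
boot[510:512] = b"\x55\xaa"
disk = bytes(2 * SECTOR) + bytes(boot) + bytes(SECTOR)
candidates = find_boot_sectors(disk)
```

Each hit is only a candidate: the geometry recorded in the boot sector still has to agree with what is actually found at those offsets before the volume can be trusted.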

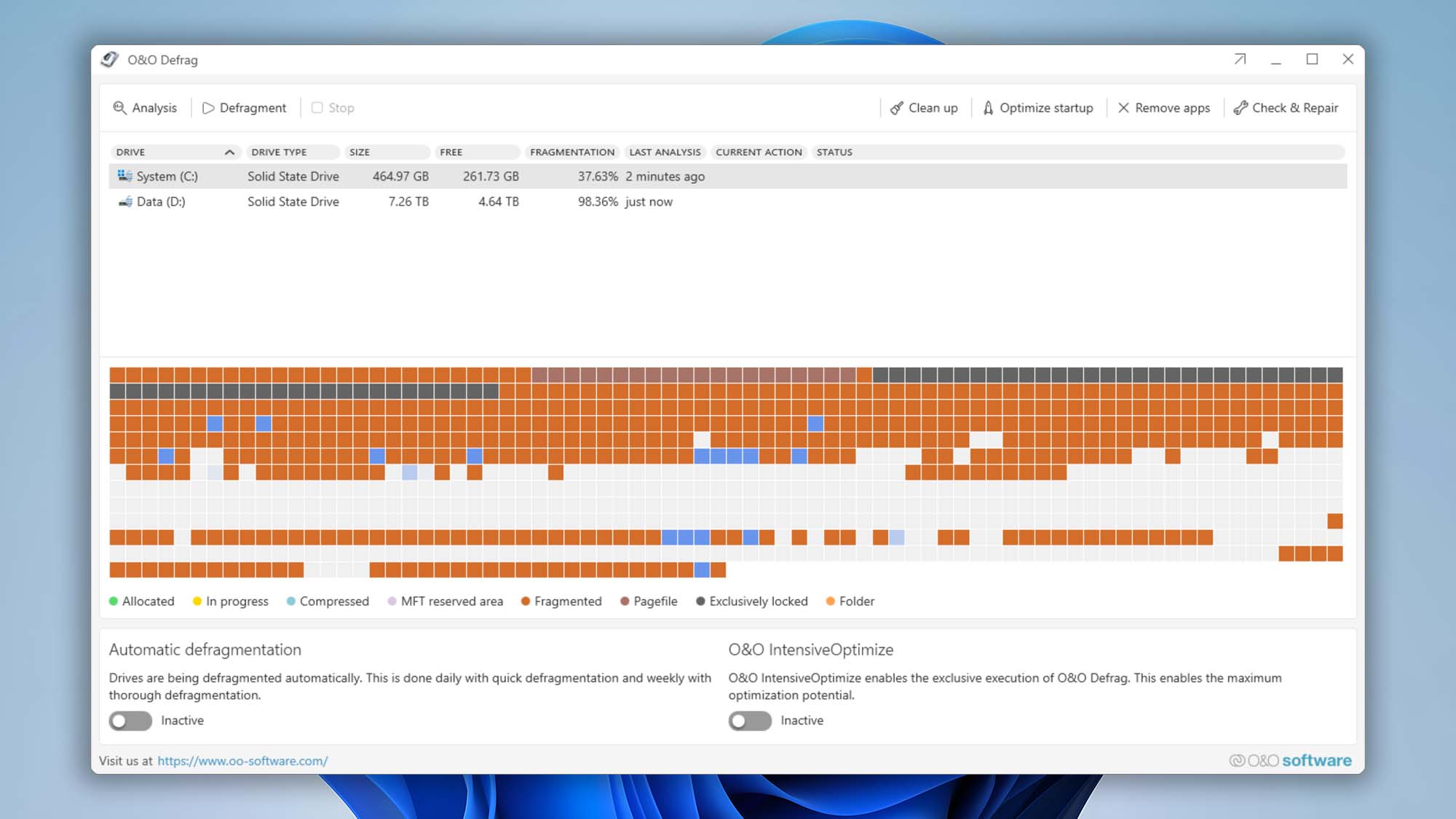

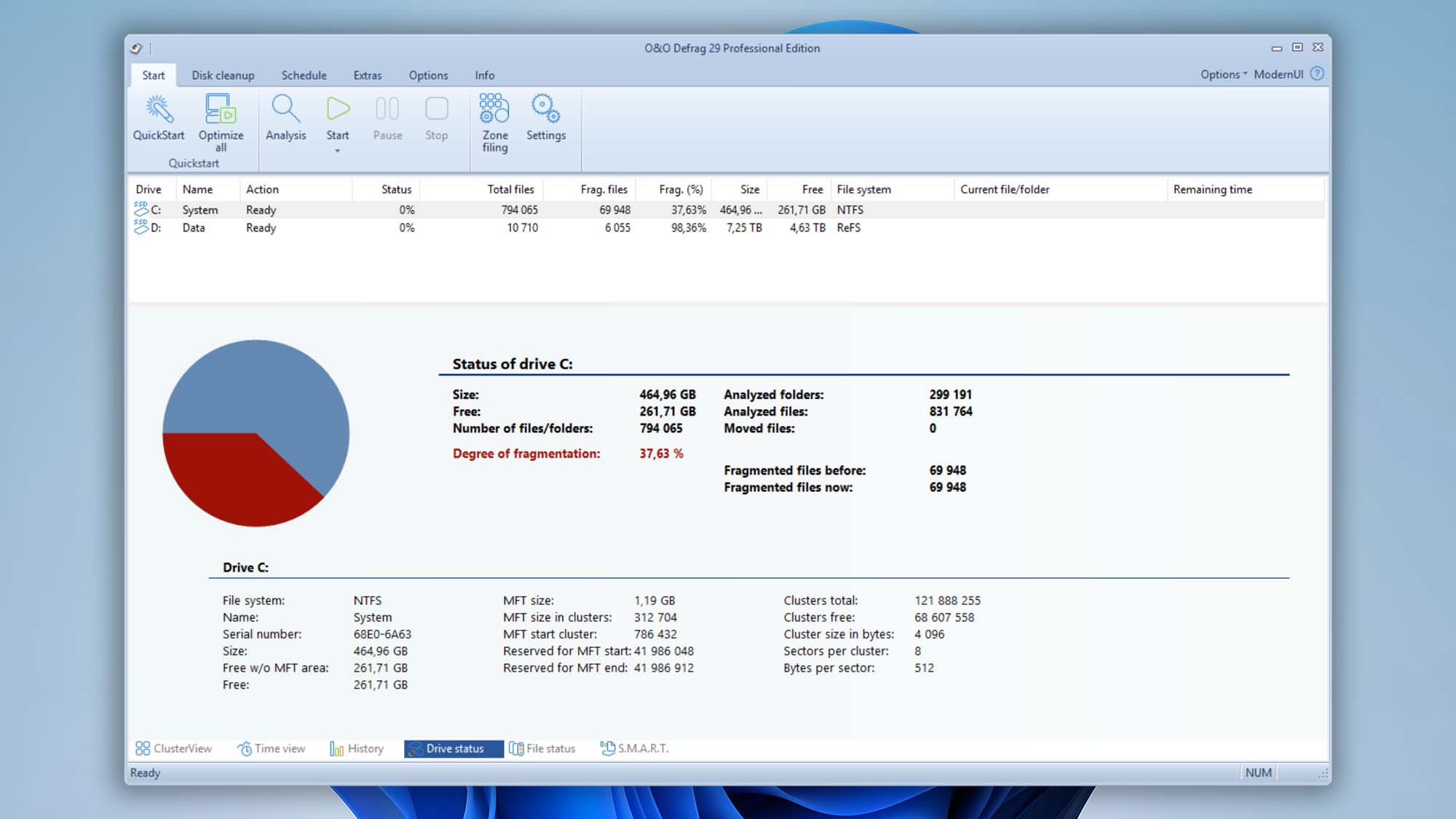

Hard Things, Simple Words

So why is a file stored in fragments, and why are these file fragments so scattered? As files are added to a hard drive over time, more and more space is taken up. Imagine that you have a completely full hard drive, but you then delete five different files, each of which takes up 100 MB. You now have 500 MB free. But the free space is not contiguous. When you now want to save a 200 MB file, there is no empty area that is large enough by itself. So your computer splits the 200 MB file in half and stores it in two of the 100 MB spaces. In real-world conditions, there is much more fragmentation!
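The 200 MB example can be replayed in a few lines of Python. This is a toy first-fit allocator with made-up hole positions, not how any particular file system chooses space, but it shows how one file ends up in several places.

```python
from typing import List, Tuple


def allocate(free_holes: List[Tuple[int, int]], size_mb: int) -> List[Tuple[int, int]]:
    """Fill free holes in order until the file fits; return its fragments.

    free_holes and the result are lists of (start_mb, length_mb) pairs.
    """
    fragments = []
    remaining = size_mb
    for start, length in free_holes:
        if remaining == 0:
            break
        take = min(length, remaining)
        fragments.append((start, take))
        remaining -= take
    if remaining:
        raise ValueError("not enough free space")
    return fragments


# Five 100 MB holes left behind by deleted files (positions are illustrative).
holes = [(100, 100), (400, 100), (900, 100), (1500, 100), (2200, 100)]
fragments = allocate(holes, 200)
```

Here the 200 MB file lands in two fragments 300 MB apart, and only the file system's records say that they belong together.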

Imagine having ten different jigsaw puzzles (each puzzle representing a file), and then mixing all of the pieces together. When you run a data recovery application such as Disk Drill, it looks for the “addresses” in the file system that tell it how to put each puzzle together. If these addresses are found, Quick Scan can quickly produce results.

But file recovery is often not that easy. If the address information is gone for a deleted file (this happens more slowly on the FAT file system, but more quickly on NTFS and HFS/HFS+), there’s no way to know which fragments match each other. Another cause of problems is that individual areas on the hard drive can physically “go bad”, making their data inaccessible. So, in the case of that 200 MB file stored in two fragments, we would have a problem if one fragment was on a part of the hard drive that went bad: only half of the file would be readable.

Deep Scan is made for precisely such cases. Signature analysis allows Deep Scan to look for files based on their structure: Disk Drill knows how a file “should” look. Microsoft Word files have a different structure than Adobe Illustrator files, which have a different structure than iTunes music files, and so on. These structures give each kind of file a specific signature. With Deep Scan, Disk Drill goes through the accessible fragments, combining them in ways that make sense based on the signature of the file that you’re looking for. In good conditions, most or all of the fragments can be recovered. But sometimes, the file system does not even know where the fragments are: in these cases, unfortunately, there is no way to retrieve the information.

As a side note, large files have more fragments and require that more information about these fragments be stored in the file system, thus making more places where things can go wrong. This means that large files are less likely to be recovered completely and correctly, and are more likely to encounter corruption problems during recovery attempts.

As you can see, an awful lot in hard drive recovery depends on chance. And this is only the tip of the iceberg. You can read more about other Variables that Impact File Recovery Chances here. That’s why we strongly recommend keeping regular backups, which take most of the risk out of data loss.

The Right Way to Check (Before You Buy)

- 🛑 Stop using the affected device immediately. New writes may overwrite your file’s blocks.

- 💾 Create a byte-to-byte backup (optional but ideal). In Disk Drill, make a byte-level image of the disk and scan that image to avoid stressing a failing device.

- ⚙️ Install Disk Drill Basic on a separate drive (not the one that lost data). Run a full scan.

- 👁️‍🗨️ Use Preview on the exact file you care about. If Preview renders the content correctly, proceed to upgrade and recover. (Disk Drill can also display estimated recovery chances to help you triage large result sets.)
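For readers curious what a byte-to-byte backup does under the hood, the following Python sketch copies a source to an image in fixed-size chunks and zero-fills anything it cannot read, so offsets in the image still match the device. It is an illustration only: `image_device` is a hypothetical helper, not a Disk Drill function, and dedicated imaging tools add retries, read-order strategies, and logs of bad regions.

```python
import io
from typing import BinaryIO


def image_device(src: BinaryIO, dst: BinaryIO, total_size: int,
                 chunk: int = 1024 * 1024) -> int:
    """Copy total_size bytes from src to dst; return the number of unreadable chunks."""
    bad_chunks = 0
    offset = 0
    while offset < total_size:
        want = min(chunk, total_size - offset)
        try:
            src.seek(offset)
            data = src.read(want)
        except OSError:
            # Unreadable area (e.g. bad sectors): note it and move on.
            data = b""
            bad_chunks += 1
        # Pad short or failed reads with zeros to keep offsets aligned.
        dst.write(data.ljust(want, b"\x00"))
        offset += want
    return bad_chunks


# Demo on in-memory "devices"; a real run would open the source read-only.
source = io.BytesIO(b"abc" * 1000)
target = io.BytesIO()
bad = image_device(source, target, total_size=3000, chunk=1024)
```

Scanning the resulting image instead of the original device means a failing drive is read once rather than repeatedly.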

Special “Game-Over” Cases You Should Know About

- Full format on Windows (Vista and later): writes zeros across the volume—erasing recoverable content. (Quick format ≠ full format.)

- Secure erase / multi-pass overwrite / DoD-style wipes: modern guidance shows a single proper overwrite suffices for contemporary disks; once overwritten, data isn’t coming back.

- SSD TRIM after delete/format: often purges the data blocks quickly; once trimmed and cleaned, Deep Scan will not find meaningful content.

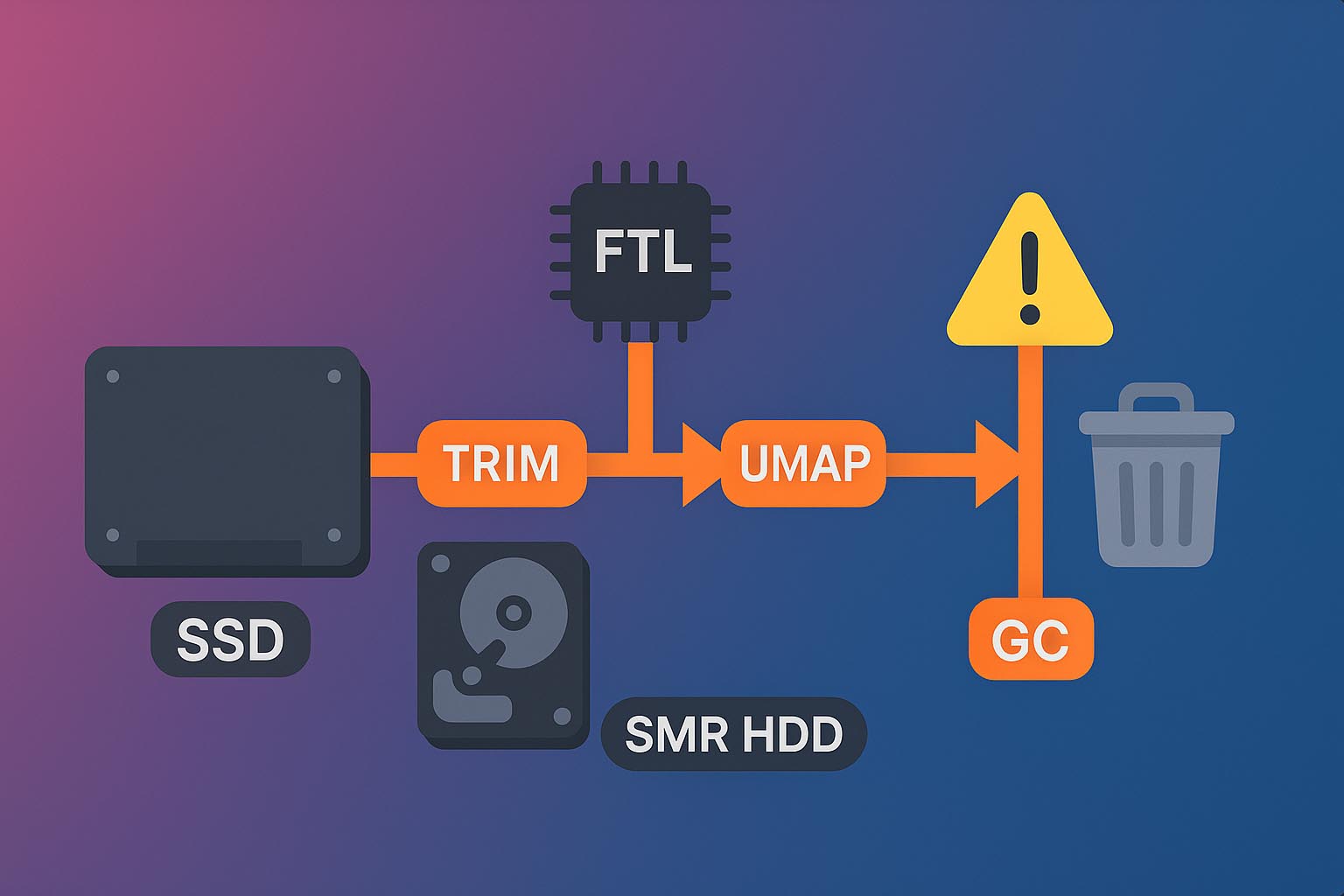

TRIM and Data Recovery: Why SSDs (and Some SMR HDDs) Change the Game

TRIM (and its counterparts, such as SCSI UNMAP; some SMR hard drives accept these commands too) lets the file system tell the device which logical blocks are no longer in use. The drive’s internal flash translation layer (FTL) then marks those blocks free in its mapping tables, prepping them for background cleanup (garbage collection) to keep write speeds high and wear low. Great for performance and longevity, brutal for data recovery.

Here’s the catch: after TRIM updates the mapping, those logical blocks are treated as empty. Many devices even return zeros for trimmed ranges before they’re physically erased. Recovery tools can’t see or traverse to the old data anymore because the references are gone at the device level, not just the file system level. When garbage collection eventually wipes the underlying pages, the original bytes are physically removed—making post-delete or post-format recovery effectively impossible in most real-world cases (timing varies by vendor, model, and media type).
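A toy model can make the two stages (mapping update first, physical erase later) easier to see. The Python class below is purely illustrative: `ToySSD` and its methods are invented for this sketch, and real controllers are far more complex and vendor-specific.

```python
class ToySSD:
    """Toy flash translation layer: logical block address -> physical page."""

    def __init__(self, block_size: int = 4096):
        self.block_size = block_size
        self.mapping = {}   # logical block address -> physical page id
        self.pages = {}     # physical page id -> stored bytes
        self.next_page = 0

    def write(self, lba: int, data: bytes) -> None:
        page = self.next_page
        self.next_page += 1
        self.pages[page] = data.ljust(self.block_size, b"\x00")[:self.block_size]
        self.mapping[lba] = page  # any older page for this LBA becomes stale

    def trim(self, lba: int) -> None:
        # Only the mapping changes; the stale page stays until garbage collection.
        self.mapping.pop(lba, None)

    def read(self, lba: int) -> bytes:
        page = self.mapping.get(lba)
        if page is None:
            return bytes(self.block_size)  # unmapped ranges read back as zeros
        return self.pages[page]

    def garbage_collect(self) -> None:
        live = set(self.mapping.values())
        for page in list(self.pages):
            if page not in live:
                del self.pages[page]  # physical erase: the bytes are gone


ssd = ToySSD()
ssd.write(7, b"secret")
ssd.trim(7)
after_trim = ssd.read(7)
still_on_flash = any(p.startswith(b"secret") for p in ssd.pages.values())
ssd.garbage_collect()
```

In this model, software that can only call `read` sees zeros immediately after `trim`, even though the bytes linger in `pages` until `garbage_collect` runs. That gap is the one lab-level techniques try to exploit.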

File deletion (TRIM-enabled devices)

After you delete a file, the OS marks its logical blocks as free and quickly issues a TRIM/UNMAP to the device. The SSD’s flash translation layer (FTL) updates its mapping to mark those blocks invalid and schedules them for garbage collection. The physical cleanup can start immediately or be deferred—from seconds to days—depending on the controller, firmware, workload, and idle time.

There’s only a brief window, often just a few seconds, before the FTL processes TRIM. If power is cut before the mapping update, software recovery may still see the old data; once TRIM is honored, the device typically returns zeros for those ranges and standard tools can’t access the content. In specialized labs, engineers may attempt controller tech-mode/chip-off procedures to read raw NAND and rebuild the mapping, but success is not guaranteed and can be blocked by controller-level encryption, wear-leveling, or later garbage collection that physically erases the pages.

Formatting (TRIM-enabled devices)

A format rebuilds the file system’s metadata and the OS promptly issues TRIM/UNMAP for the ranges that used to hold your files. The SSD’s FTL (flash translation layer) marks those logical blocks invalid and places them in the garbage-collection queue. Depending on the controller, firmware, workload, and idle time, cleanup may begin immediately or be deferred for minutes, hours, or longer.

After the device honors TRIM, standard recovery tools will see those ranges as empty (often reading back zeros). If power is removed before garbage collection erases the cells, a specialized lab might attempt tech-mode/chip-off imaging to read raw NAND and reconstruct data—results are uncertain and depend on controller encryption, wear-leveling history, and how much the drive has been used since formatting. Once garbage collection completes, the wiped pages are irrecoverable by software or hardware means.

Metadata damage (TRIM-enabled devices)

If metadata or file-system structures are corrupted, the OS can’t reliably determine which logical blocks are safe to free, so it doesn’t issue TRIM/UNMAP for those ranges. The SSD’s FTL mapping continues to treat the affected blocks as in use, and garbage collection leaves them alone until valid instructions arrive (e.g., after a successful repair or reformat).

Because TRIM isn’t executed, the underlying data often remains physically intact even though the volume won’t mount or files appear missing. This creates a window where Disk Drill can parse orphaned records (MFT entries, APFS/Ext B-trees) or fall back to Deep Scan (signature carving) to reconstruct content. Results depend on how extensive the corruption is and the file system involved. Important: avoid running repair tools (e.g., chkdsk, fsck, first-aid) before imaging—they may “fix” structures by freeing space and trigger TRIM, permanently destroying recoverable data.

What Is a File System (and Why It Matters for Recovery)

A file system (often written “filesystem” or FS) is the set of on-disk structures and rules your operating system uses to organize data and track free space on any storage device—HDDs, SSDs, USB drives, SD cards, you name it. Think of it as the library catalog that tells the OS what a file is, where its pieces live, and which spaces are still empty.

Sectors, Blocks, and Addresses (The Physical-ish Layer)

Storage can be treated as a long, linear address space: every byte sits at an offset from the start (its “address”). Devices read and write in minimum units called sectors (typically 512 bytes or 4096 bytes on modern drives). Above that, most file systems allocate space in larger units:

- Blocks / clusters / allocation units: groups of sectors (commonly 4 KB, 8 KB, 16 KB, etc.).

- Files are stored in whole blocks. A 10-byte file still consumes one 4 KB block; a 5 KB file consumes two 4 KB blocks, and so on.
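The whole-block rule is simple arithmetic, sketched here in Python. The function names are illustrative, and the 4 KB default just mirrors the example above.

```python
def clusters_needed(file_size: int, cluster_size: int = 4096) -> int:
    """Number of whole clusters required to hold file_size bytes."""
    return -(-file_size // cluster_size)  # ceiling division with integers


def slack_bytes(file_size: int, cluster_size: int = 4096) -> int:
    """Unused bytes at the end of the file's last cluster."""
    return clusters_needed(file_size, cluster_size) * cluster_size - file_size


ten_byte_file = clusters_needed(10)        # one 4 KB cluster
five_kb_file = clusters_needed(5 * 1024)   # two 4 KB clusters
```

The unused tail of the last cluster (the slack) matters for recovery too, because it can still hold leftover bytes from whatever occupied that cluster before.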

On SSDs, there’s an extra indirection called the Flash Translation Layer (FTL) that maps these logical block addresses to the NAND pages and erase blocks underneath. That mapping affects recoverability later.

What the file system actually stores

Beyond your file’s content, an FS maintains metadata—the facts about the file and the map to its content:

- Allocation maps (bitmaps, free lists) to know which blocks are free vs. in use.

- File records that hold the file’s size, timestamps, attributes, and—critically—where its data lives (extents/runlists).

- Directory structures that link human-readable names to file records, forming folders and paths.

- Optional security and extras: permissions/ACLs, extended attributes, compression flags, and, on some systems, snapshots/journals.

Different file systems implement these ideas differently:

- NTFS (Windows): central MFT records, $Bitmap for allocation, journals, and “extents” for fragmented data.

- APFS/HFS+ (macOS): multiple B-trees (catalog, extents, object maps) for fast lookups and snapshots (APFS).

- ext4 (Linux): inodes + extent maps + journals for metadata consistency.

Why Different File System Types Exist

When you format a drive, you’re asked to pick a file system (also called a “format” or “type”). It’d be nice if one format fit every use case, but that’s not how storage works in the real world.

One Size Doesn’t Fit All

Every file system makes trade-offs among performance, reliability, features, and hardware fit. Some are tuned for general desktop use, others for flash media, huge volumes, snapshots, or fault tolerance. As storage tech evolves, newer designs add better scalability, crash resilience, and data services (checksums, compression, snapshots), while older ones stick around for compatibility.

Operating Systems Drive the Choice

File systems are tightly coupled to their OS ecosystem. Vendors prioritize their native formats and provide first-class drivers for them, while support for others can be limited or read-only.

- 🪟 Windows: NTFS, FAT32/exFAT, ReFS

- 🍏 macOS: APFS, HFS+

- 🐧 Linux: ext4, Btrfs

- 🦖 BSD/Solaris/Unix: UFS, ZFS (pooled storage, checksums, snapshots)

Diversity is Good—until You Move Data

This variety lets developers optimize for their platform and lets you pick what best fits your device and workload. The downside is interoperability: if an OS doesn’t natively support a format, it may fail to mount the volume or mount it read-only. That’s where specialized tools help you access or copy data across ecosystems.

Why file system variety matters for recovery

Each file system stores metadata differently (catalogs, inodes, B-trees, extents/runlists, journals). It also handles deletion, trimming, and block allocation in its own way. Effective recovery software must:

- Parse the target file system’s on-disk structures precisely.

- Reconstruct directories, names, timestamps, and extents where possible.

- Fall back to content-aware (signature) carving when metadata is gone.

Windows File Systems. How They Work and What It Means for Recovery

On Windows, file system choices are intentionally limited for compatibility and stability: NTFS is the default for modern versions of Windows, FAT (and its modern extension exFAT) remains common on removable media and cross-platform drives, and ReFS serves select server and high-resilience scenarios starting with Windows Server 2012. In the sections below, we break down how these formats work, how they compare for performance and reliability, and—most importantly—what each one means for data recovery and for other Windows storage technologies.

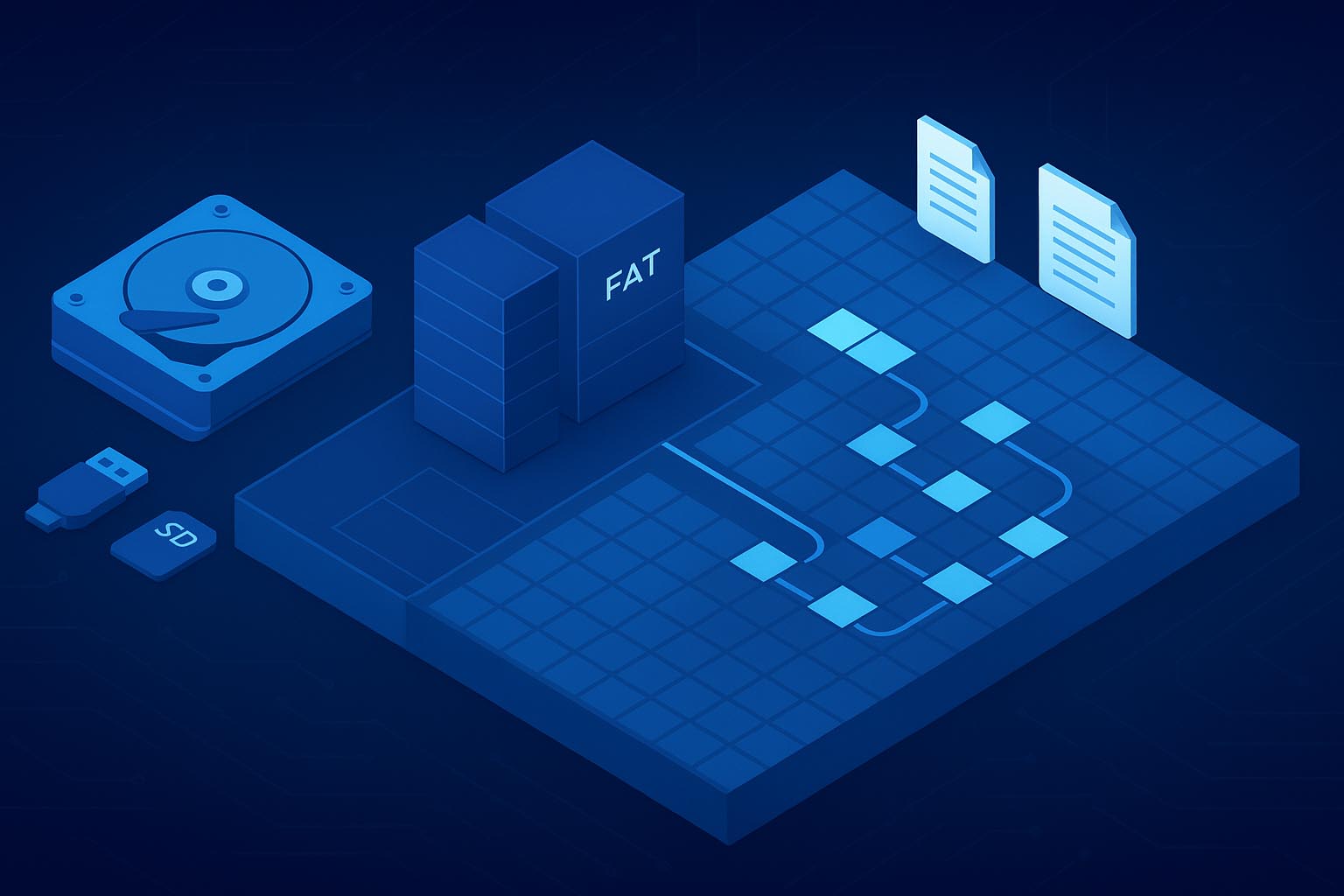

1. FAT and FAT32

FAT (File Allocation Table) is one of the oldest, simplest file systems still in common use. Originating in the MS-DOS era, it was built for small, removable media and extremely low overhead. Its later variants—FAT12, FAT16, and FAT32—mainly differ by how many bits they use to address clusters (12/16/32), which determines the maximum count of addressable clusters on a volume.

✅ Why it persists today:

- Compatibility: almost every OS and device understands FAT32 (cameras, game consoles, TVs, car stereos, etc.).

- Simplicity: tiny on-disk structures, minimal metadata, low CPU cost.

⛔ Where it falls short:

- No journaling or checksums, so corruption propagates easily.

- Max file size ~4 GB; not suited for 4K/8K video or large archives.

- Fragmentation accumulates quickly; large files end up scattered across many non-contiguous cluster runs.

On-disk layout (FAT/FAT32)

FAT-family volumes are split into three regions:

- Boot sector (Volume Boot Record): geometry, block size, and layout; on FAT32 also references FSInfo and root directory cluster.

- File Allocation Table(s): typically two copies for redundancy. Each FAT entry corresponds 1:1 to a cluster in the data area and stores either “free,” “end-of-file,” or a pointer to the next cluster in that file’s chain.

- Data area (cluster heap): where file and directory content actually lives. Clusters are fixed-size groups of sectors (e.g., 4 KB, 8 KB, … up to 64 KB). A small file still consumes a whole cluster.

Directories are files composed of 32-byte directory entries describing each item (name, attributes, size, timestamps, first cluster). Long names are stored as VFAT extension entries.

- FAT12/16: the root directory occupies a fixed area near the FATs.

- FAT32: the root directory is a normal cluster chain (its first cluster is stored in the boot sector).

How Files Are Stored (and Fragmented)

If a file spans multiple clusters, the FAT forms a linked list of cluster numbers—its cluster chain. As the volume fills and frees space, new files land in gaps, so chains become non-contiguous (fragmented). The more fragments, the more things can go wrong during recovery if any fragment is overwritten or unreadable.
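The cluster chain is a plain linked list stored in the FAT, and walking it takes only a few lines. The Python sketch below assumes FAT32 conventions (28 significant bits per entry, values of 0x0FFFFFF8 and above marking end-of-chain); `follow_chain` is a hypothetical helper, and the FAT is modeled as a simple list of integers.

```python
from typing import List, Tuple

END_OF_CHAIN = 0x0FFFFFF8  # FAT32: entries >= this value end a chain


def follow_chain(fat: List[int], first_cluster: int) -> Tuple[List[int], bool]:
    """Walk a FAT32 cluster chain; return (clusters, chain_is_intact)."""
    chain, seen = [], set()
    cluster = first_cluster
    # Clusters 0 and 1 are reserved, so a valid data cluster is >= 2.
    while 2 <= cluster < len(fat) and cluster not in seen:
        chain.append(cluster)
        seen.add(cluster)
        nxt = fat[cluster] & 0x0FFFFFFF  # top 4 bits are reserved in FAT32
        if nxt >= END_OF_CHAIN:
            return chain, True
        cluster = nxt
    return chain, False  # hit a free/invalid entry or a loop


# A fragmented file occupying clusters 3 -> 4 -> 9.
fat = [0] * 12
fat[3], fat[4], fat[9] = 4, 9, 0x0FFFFFFF
intact = follow_chain(fat, 3)

# Deletion marks the clusters free, which zeroes the links.
fat[3] = fat[4] = fat[9] = 0
after_delete = follow_chain(fat, 3)
```

After the entries are zeroed, only the starting cluster is known; the jump from cluster 4 to cluster 9 has to be guessed.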

What Happens When You Delete a File (FAT/FAT32)

- In its directory entry, the first character of the filename is replaced with 0xE5 (the “deleted” marker).

- The file’s clusters are marked free in the FAT, breaking the chain.

- The file content remains in the data area until overwritten.

- Non-fragmented files: If nothing has been written since deletion, Disk Drill’s Quick Scan can often restore the file nearly perfectly because the directory entry still contains size and starting cluster.

- Fragmented files: Deletion breaks the FAT chain that linked the file’s clusters, leaving no pointers to the middle or final fragments. The directory entry usually survives, so you still have the filename, size, and first cluster. From there, Disk Drill can guess the remaining fragments using heuristics, but results are hit-or-miss and can’t be guaranteed.
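What survives in a deleted directory entry can be shown with a short parser for the classic 32-byte short-name entry. This is a sketch that skips long-name (VFAT) entries and timestamps; `parse_entry` and the demo values are illustrative.

```python
import struct
from typing import Optional

DELETED_MARKER = 0xE5
ATTR_LONG_NAME = 0x0F


def parse_entry(entry: bytes) -> Optional[dict]:
    """Parse one 32-byte FAT short directory entry; None for unused/LFN slots."""
    if len(entry) != 32:
        raise ValueError("directory entries are exactly 32 bytes")
    if entry[0] == 0x00 or entry[11] == ATTR_LONG_NAME:
        return None
    deleted = entry[0] == DELETED_MARKER
    # The first character is lost on deletion, so show a placeholder instead.
    stem = ("?" + entry[1:8].decode("ascii", "replace")) if deleted \
        else entry[0:8].decode("ascii", "replace")
    ext = entry[8:11].decode("ascii", "replace").rstrip()
    (cluster_hi,) = struct.unpack_from("<H", entry, 20)
    (cluster_lo,) = struct.unpack_from("<H", entry, 26)
    (size,) = struct.unpack_from("<I", entry, 28)
    name = stem.rstrip()
    return {
        "name": f"{name}.{ext}" if ext else name,
        "deleted": deleted,
        "first_cluster": (cluster_hi << 16) | cluster_lo,
        "size": size,
    }


# Demo: what was PHOTO.JPG after deletion (first name byte replaced by 0xE5).
demo = bytearray(32)
demo[0:11] = b"\xe5HOTO   JPG"
demo[11] = 0x20                          # archive attribute
struct.pack_into("<H", demo, 26, 5)      # first cluster (low word)
struct.pack_into("<I", demo, 28, 123456) # file size in bytes
info = parse_entry(bytes(demo))
```

The size and first cluster are still there, which is all a contiguous file needs. For a fragmented file they give only the starting point.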

What Happens When You Format (FAT/FAT32)

- A quick format recreates the boot sector/FSInfo and clears the FAT tables and Root Directory areas, making all clusters appear free.

- Data blocks in the cluster heap usually remain until overwritten (unless a full format writes zeros).

- Non-fragmented files: Names, paths, and starting clusters are gone, but Disk Drill’s Deep Scan (signature carving) can detect file signatures (JPEG, MOV, ZIP, etc.) and recover raw content. Metadata like original name/folder is typically not recoverable.

- Fragmented files: Without the FAT chains, predicting later fragments is hard; many large files will be partial or corrupted.

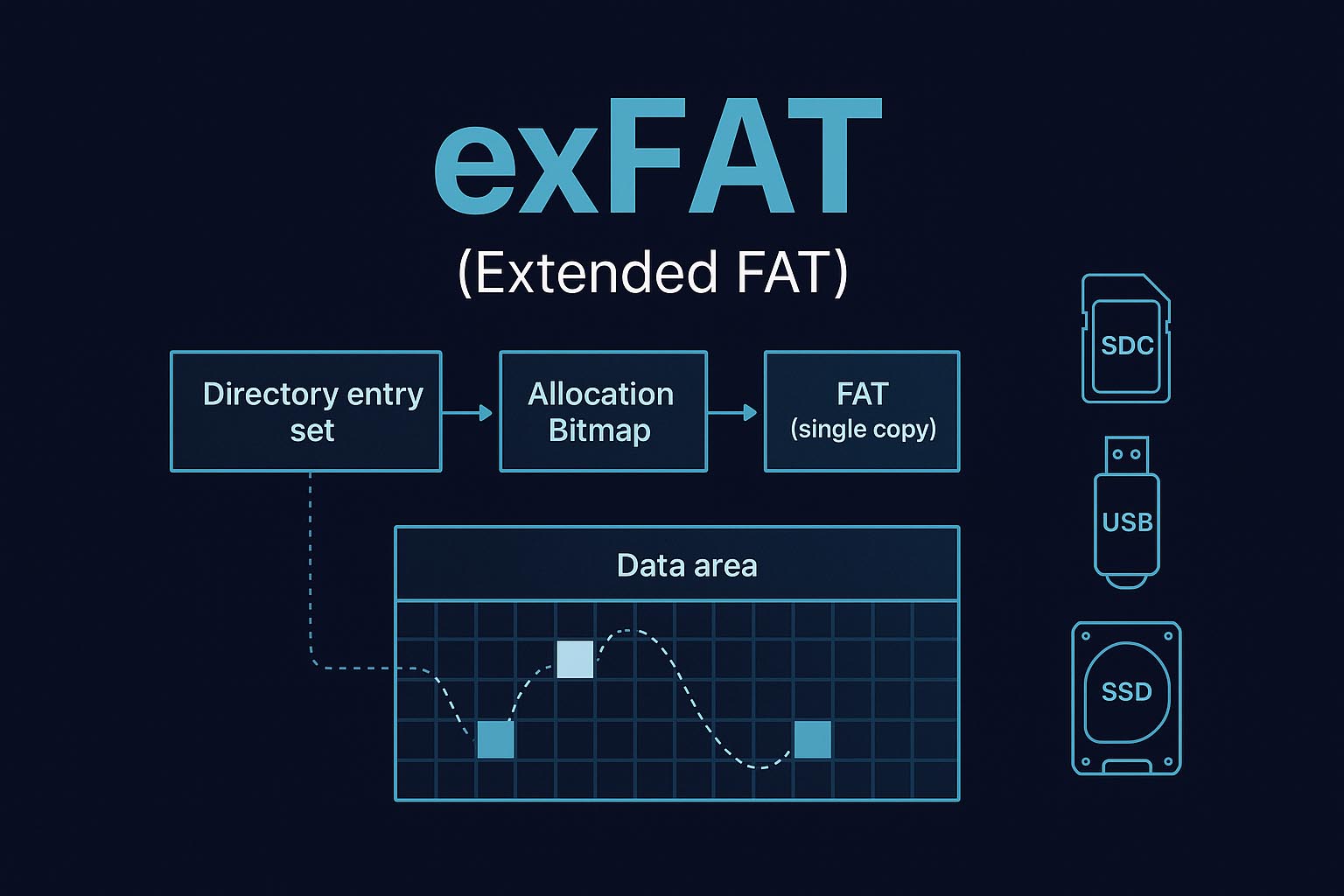

2. exFAT (Extended FAT)

exFAT is the modern successor to FAT32. It keeps the familiar “allocation table + data area” layout but updates how space is managed so it scales to very large files and volumes—a common choice for SDXC cards, USB flash drives, and external SSDs. Unlike FAT32, exFAT isn’t capped at 4 GB per file and typically maintains a single FAT. The FAT is consulted mainly to chain clusters for fragmented files; day-to-day allocation is handled elsewhere.

What’s Different Under the Hood

- Allocation Bitmap: Instead of marking used/free space inside the FAT itself, exFAT keeps a dedicated bitmap that flags each cluster as in use or free. This lowers write amplification on flash media and helps reduce fragmentation.

- Directory entry sets: File metadata lives in a set of directory entries (primary file entry + stream extension + long name entries). Among other things, these record the first cluster and valid data length for the file.

- FAT usage: The File Allocation Table still exists, but it’s mostly needed when a file spans multiple non-contiguous clusters. Many small or contiguous files never need to consult it after allocation.

What Happens When You Delete a File (exFAT)

- The file’s directory entry set is invalidated, and the Allocation Bitmap marks the file’s clusters free.

- The FAT chain (if the file was fragmented) is not always cleared immediately, so stale links may linger until those entries are reused.

- The payload data remains in the data area until it’s overwritten by new writes.

- Non-fragmented files: With directory metadata intact and clusters newly marked free, Disk Drill’s Quick Scan can often restore the file with original name and timestamps, provided nothing has overwritten the clusters.

- Fragmented files: The FAT chain may still reference the sequence of clusters, allowing Disk Drill to rebuild the file end-to-end. If those FAT entries are reused or damaged, the links vanish and only the first cluster is known. In that case, Disk Drill falls back to Deep Scan (signature carving) to reconstruct content; names/paths are usually lost. exFAT’s tendency toward lower fragmentation on flash media often improves carving success, but there’s no guarantee for heavily fragmented, long videos.
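In exFAT, "invalidating" an entry set comes down to clearing the in-use bit (the top bit) of each entry's type byte, so a file entry of type 0x85 becomes 0x05 while the rest of the entry stays in place. A small Python sketch of that check, with an illustrative `classify` helper covering only a few common entry types:

```python
from typing import Tuple

IN_USE_BIT = 0x80

# Entry type with the in-use bit masked off -> human-readable kind.
KINDS = {
    0x01: "allocation bitmap",
    0x02: "up-case table",
    0x03: "volume label",
    0x05: "file",
    0x40: "stream extension",
    0x41: "file name",
}


def classify(entry_type: int) -> Tuple[str, bool]:
    """Return (kind, in_use) for the first byte of a 32-byte exFAT entry."""
    if entry_type == 0x00:
        return ("end of directory", False)
    kind = KINDS.get(entry_type & 0x7F, "unknown")
    return (kind, bool(entry_type & IN_USE_BIT))


live_file = classify(0x85)
deleted_file = classify(0x05)
```

Because only one bit changes, a scanner can treat entries with a known type and a cleared in-use bit as candidates for deleted files and read the first cluster and data length from the accompanying stream extension entry.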

What Happens When You Format (exFAT)

- A quick format recreates the boot region, root directory, and Allocation Bitmap, effectively making the whole volume appear empty while leaving much of the old data in place.

- A full format may perform a surface wipe depending on the tool/device, which destroys recoverable content.

- Non-fragmented files: After a quick format, exFAT clears the directory and Allocation Bitmap but may leave parts of the FAT intact. If those FAT entries still contain links to the clusters that belonged to the deleted file, the single contiguous run can be reconstructed with high confidence and the content recovered intact (though original names/paths are gone). If those links have been reused—or the device issued TRIM or a zero-fill—recovery falls back to signature carving or may be infeasible.

- Fragmented files: Quick format removes the directory sets and the Allocation Bitmap/FAT context you’d need to reassemble multi-extent files. Without a valid FAT chain, Deep Scan can only carve first fragments it finds by signature; later fragments are hard to locate and order, so large videos/archives often come back partial or corrupted. For camera cards with long MP4/MOV clips, try Advanced Camera Recovery (ACR)—it can sometimes reassemble fragmented recordings when generic carving can’t.

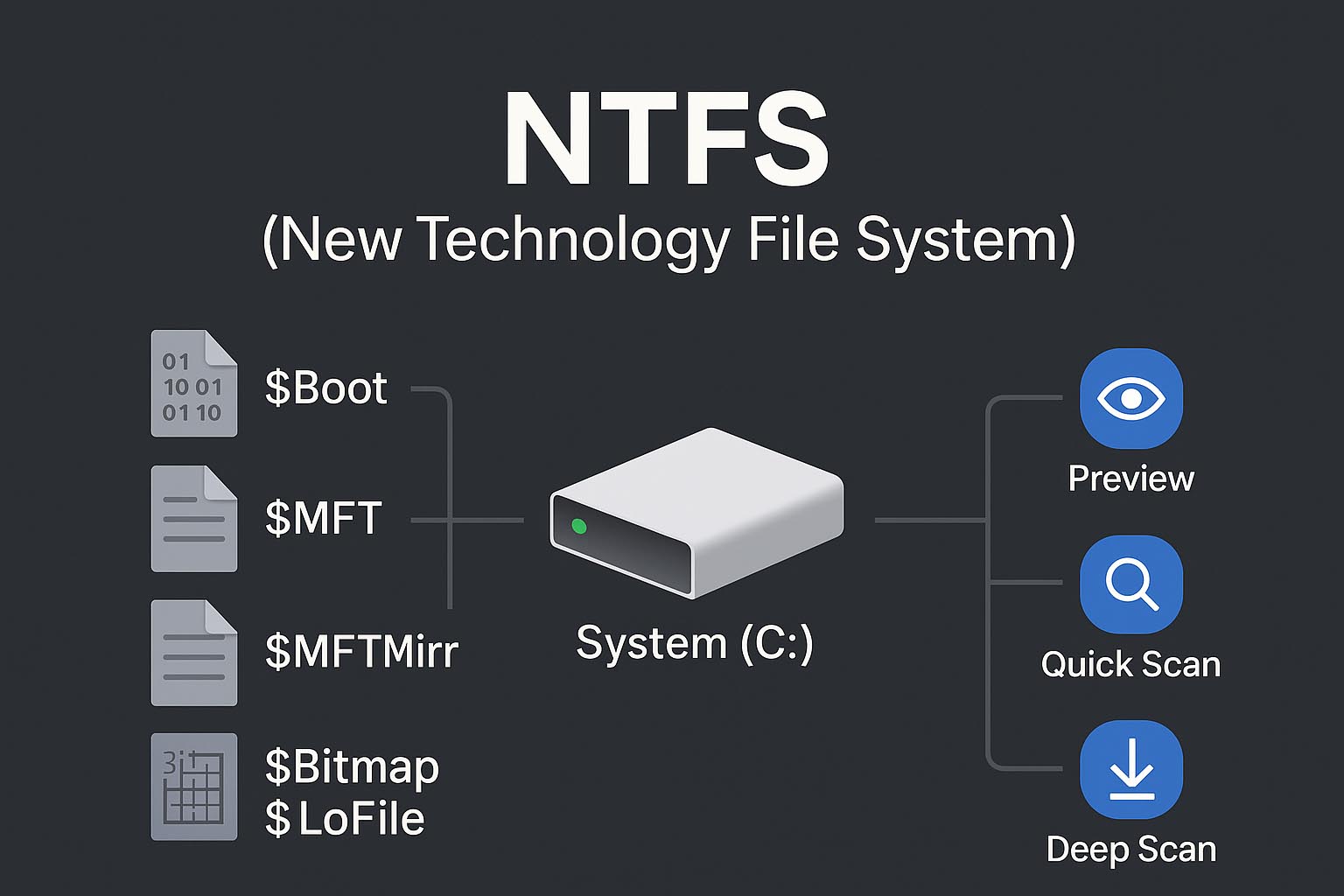

3. NTFS (New Technology File System)

NTFS (New Technology File System) debuted with Windows NT and is the default on modern Windows desktops and servers. It was a major step up from FAT/FAT32, adding reliability, security, and scalability.

Why NTFS is Different

- Metadata journaling: NTFS logs critical metadata changes in $LogFile, enabling fast recovery from crashes (it journals metadata, not full file contents).

- Security & features: ACLs/permissions, EFS encryption, compression, sparse files, hard links, reparse points, alternate data streams.

- Performance & scale: Large volumes, efficient allocation, and better fragmentation handling via extents.

Core On-Disk Structures (Special “System Files”)

- $Boot – volume parameters used during boot.

- $MFT / $MFTMirr – the Master File Table and its small mirror; every file and directory has an MFT record.

- $Bitmap – cluster allocation map for the whole volume.

- $LogFile – metadata journal.

- $BadClus – tracks known bad clusters.

- Other helpers: $AttrDef, $UpCase, $Secure, $Extend (e.g., $UsnJrnl).

Files, Directories, and Extents (the NTFS Model)

Each file or folder is described by a 1024-byte MFT record (default size) that contains attributes:

- $STANDARD_INFORMATION, $FILE_NAME (timestamps, names, IDs)

- $DATA (the content), which can be:

- Resident – tiny files stored inside the MFT record.

- Non-resident – content lives in clusters elsewhere; the record stores a runlist (sequence of extents) pointing to those clusters.

- Directories are files that hold B-tree style indexes ($INDEX_ROOT, $INDEX_ALLOCATION, directory $BITMAP) mapping names to child MFT records.

Because NTFS tracks all extents in the MFT, even fragmented files can be precisely located—as long as their MFT records survive.
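A runlist is stored compactly: each run starts with a header byte whose low nibble gives the byte width of the length field and whose high nibble gives the byte width of the offset field. The offset is signed and relative to the previous run's starting cluster, and a zero byte ends the list. The Python sketch below decodes that encoding; `decode_runlist` is an illustrative name, and sparse runs are reported with a start of `None`.

```python
from typing import List, Optional, Tuple


def decode_runlist(data: bytes) -> List[Tuple[Optional[int], int]]:
    """Decode NTFS data runs into (start_cluster, length_in_clusters) pairs."""
    runs = []
    pos = 0
    lcn = 0  # running absolute cluster number
    while pos < len(data) and data[pos] != 0x00:
        header = data[pos]
        len_size = header & 0x0F   # bytes used by the length field
        off_size = header >> 4     # bytes used by the offset field
        pos += 1
        length = int.from_bytes(data[pos:pos + len_size], "little")
        pos += len_size
        if off_size == 0:
            runs.append((None, length))  # sparse run: no clusters on disk
        else:
            delta = int.from_bytes(data[pos:pos + off_size], "little", signed=True)
            lcn += delta
            runs.append((lcn, length))
        pos += off_size
    return runs


single = decode_runlist(bytes.fromhex("21 18 34 56 00"))
fragmented = decode_runlist(bytes.fromhex("11 30 60 21 10 00 01 11 20 E0 00"))
```

In the second example the third run has a negative offset (0xE0 is -32), so the file's last fragment sits before its second one on disk. Without the surviving MFT record, nothing on the volume would reveal that ordering.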

What Happens When You Delete a File (NTFS)

When you delete a file on NTFS, its MFT record isn’t erased—it’s simply marked unused, which means the record can be reused at any time. The clusters that held the file’s content are flagged free in $Bitmap, and the file’s entry is removed from its parent directory. The actual data usually remains on disk until new writes reuse those clusters.

Because the MFT record still retains the file’s name, size, timestamps, and storage location (runlist), data recovery software can typically reconstruct the file with high accuracy. If the clusters haven’t been overwritten, recovery success is close to 100%; once those clusters are reused, chances drop sharply.
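"Marked unused" is literally a flag in the record header. As a hedged illustration, the Python sketch below reads the 16-bit flags field at offset 0x16 of a record that starts with the `FILE` signature (bit 0 = in use, bit 1 = directory). The helper name and demo records are made up, and fix-up (update sequence) handling is omitted.

```python
import struct
from typing import Optional

FLAG_IN_USE = 0x0001
FLAG_DIRECTORY = 0x0002


def mft_record_state(record: bytes) -> Optional[dict]:
    """Return the in-use/directory flags of an MFT record, or None if invalid."""
    if len(record) < 0x18 or record[0:4] != b"FILE":
        return None
    (flags,) = struct.unpack_from("<H", record, 0x16)
    return {
        "in_use": bool(flags & FLAG_IN_USE),
        "directory": bool(flags & FLAG_DIRECTORY),
    }


# Demo: a minimal fake record for a live file, then the same record "deleted".
live = bytearray(1024)
live[0:4] = b"FILE"
struct.pack_into("<H", live, 0x16, FLAG_IN_USE)

deleted = bytearray(live)
struct.pack_into("<H", deleted, 0x16, 0x0000)

live_state = mft_record_state(bytes(live))
deleted_state = mft_record_state(bytes(deleted))
```

Everything else in the record (names, timestamps, runlist) is untouched by the flag change, which is why recovery works so well until the record slot is reused for a new file.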

What Happens When You Format (NTFS)

A quick format creates a new Master File Table, which overwrites the beginning of the previous MFT while leaving much of the old table farther on disk intact.

The metadata for the first 256 files is lost due to that partial overwrite, so those files can usually be brought back only via RAW (signature) recovery—without original names, directories, or other metadata. Files beyond that range often still have intact MFT records and can be recovered successfully (up to 100%), provided their data clusters haven’t been overwritten.

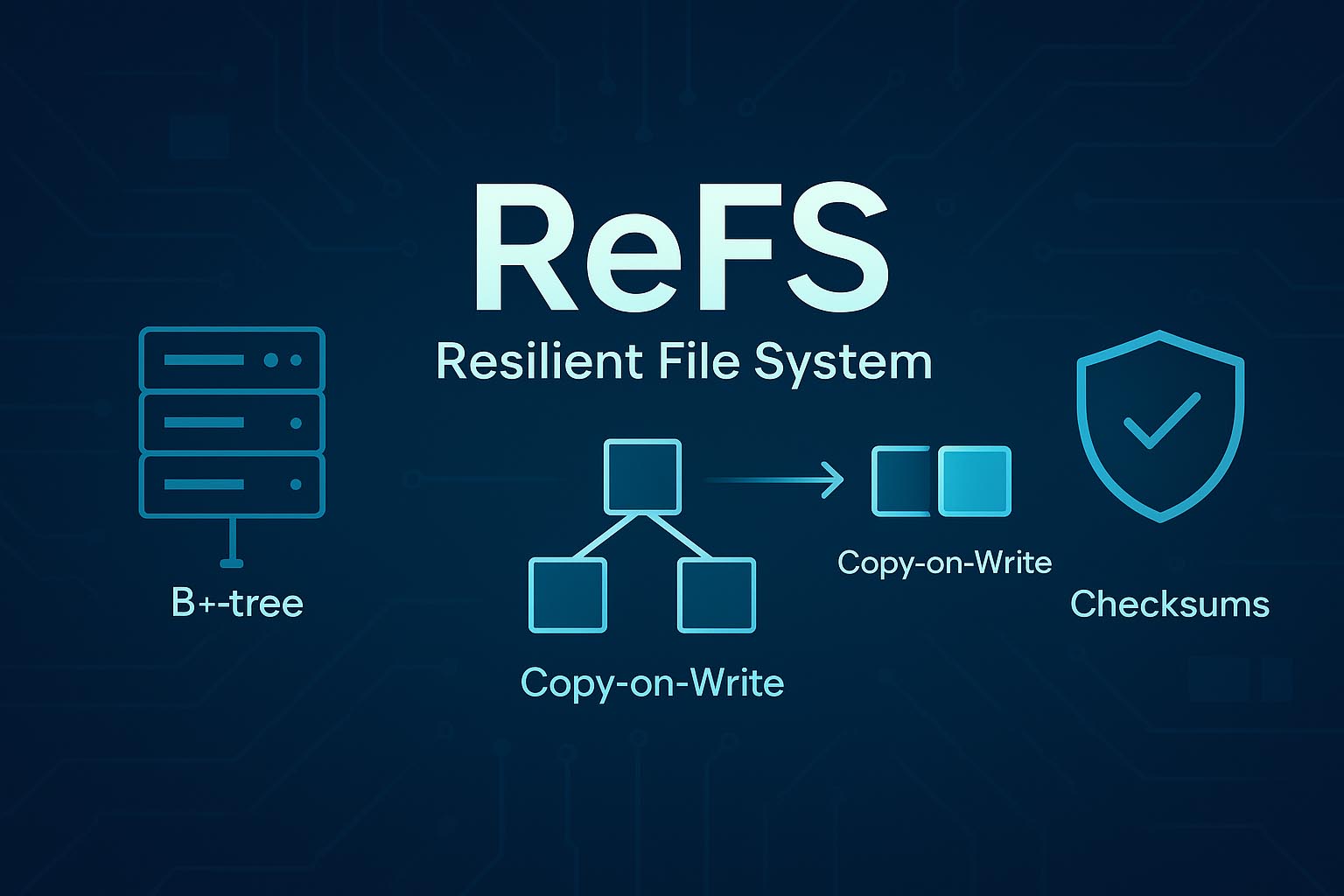

4. ReFS (Resilient File System)

ReFS is Microsoft’s integrity-first file system, introduced with Windows Server 2012 and later added to client Windows (including Windows 11 on supported editions). It targets large volumes and always-on systems where data integrity and fault tolerance matter more than legacy compatibility.

Design Highlights

- Copy-on-Write (CoW) metadata: ReFS never edits critical metadata in place. It writes an updated copy to new space and then atomically switches pointers. If a crash occurs mid-update, the older, consistent version is still present.

- Checksums & integrity streams: Metadata is protected with checksums; optional integrity streams extend protection to file data, allowing ReFS to detect corruption quickly and, with the right storage layer, auto-correct it.

- B+-tree everywhere: Both metadata and file data mappings live in B+-trees (root → internal nodes → leaves). Keys guide lookups; leaves contain either records (for directories and metadata) or pointers to file extents.

Directories and Allocation

- Directories are indexed trees, not flat lists. Directory objects store ordered keys that map names to file records (object numbers), enabling fast inserts/lookups even at massive scale.

- Extent-based layout. Like NTFS, files are stored in extents (contiguous runs of clusters). ReFS strives for large extents but will fragment when needed; the extent map lives in the file’s B+-tree record.

Reliability Plumbing

- $LogFile equivalent via CoW semantics: Instead of journaling metadata in a separate log, ReFS relies on transactional CoW updates so structures remain consistent.

- Bad-block handling: When the storage reports unreadable sectors, ReFS marks those locations and relocates data, preserving availability.

What Happens When You Delete a File (ReFS)

Deletion is just another CoW metadata update: a new version of the directory and file record is written that no longer references the file. The previous metadata version typically still exists on disk until space is reclaimed.

Because older metadata versions can remain, recovery software can walk prior tree nodes to rediscover the file’s records and extent map. If neither the metadata nor the data extents have been overwritten (or trimmed by the storage layer), the file can often be restored fully with original metadata. Once those older nodes/extents are reused, recovery falls back to signature carving of the raw data area and names/paths are usually lost.
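The effect of copy-on-write on recoverability can be modeled in miniature. The Python class below is a deliberately simplified toy, not the ReFS on-disk format: every change appends a new metadata snapshot and moves the root pointer, and a "recovery scan" walks the superseded snapshots. All names are invented for this sketch.

```python
from typing import Dict, List, Optional, Tuple

Extents = List[Tuple[int, int]]  # (start_cluster, length) pairs


class CowVolume:
    """Toy copy-on-write metadata store with a single root pointer."""

    def __init__(self) -> None:
        self.blocks: List[Dict[str, Extents]] = []  # append-only snapshots
        self.root: Optional[int] = None             # index of the live snapshot

    def _commit(self, directory: Dict[str, Extents]) -> None:
        self.blocks.append(dict(directory))  # write a new copy elsewhere
        self.root = len(self.blocks) - 1     # then switch the pointer

    def current(self) -> Dict[str, Extents]:
        return self.blocks[self.root] if self.root is not None else {}

    def create(self, name: str, extents: Extents) -> None:
        directory = dict(self.current())
        directory[name] = extents
        self._commit(directory)

    def delete(self, name: str) -> None:
        directory = dict(self.current())
        del directory[name]
        self._commit(directory)

    def scan_old_versions(self, name: str) -> Optional[Extents]:
        """Search superseded snapshots, newest first, for a stale record."""
        for idx in range(len(self.blocks) - 1, -1, -1):
            if idx != self.root and name in self.blocks[idx]:
                return self.blocks[idx][name]
        return None


vol = CowVolume()
vol.create("report.docx", [(100, 4)])
vol.delete("report.docx")
visible = "report.docx" in vol.current()
recovered = vol.scan_old_versions("report.docx")
```

In this model the old snapshot survives forever; on a real volume it lasts only until that space is reclaimed, which is why time and post-loss activity matter.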

What Happens When You Format (ReFS)

A quick format creates a fresh ReFS volume layout and new metadata roots (the B+-tree structures that describe directories, allocation, and file mappings). It doesn’t rewrite all user data blocks; instead, the new trees simply stop referencing the old ones. Because ReFS uses copy-on-write for metadata, older versions of those metadata nodes often still exist on disk until space is reclaimed, which is why some prior directory and file records can still be discovered after a quick format.

After a quick format, Disk Drill can parse orphaned ReFS B+-tree metadata to reattach files with their original properties when those older nodes and their data extents haven’t been reused; if only raw payload remains, recovery falls back to signature (content) carving without names or paths. After a full format (zero-fill), prior data is overwritten and recovery is generally not feasible.

macOS File Systems. Architecture and Its Impact on Recovery

Because Apple’s ecosystem is relatively closed, macOS gives you a focused set of formats rather than dozens of options. In the sections that follow, we’ll explain how APFS and HFS+ are organized, what that means for deleted files, formats, and snapshots, and how Disk Drill adapts its scans to each so you can make informed recovery decisions.

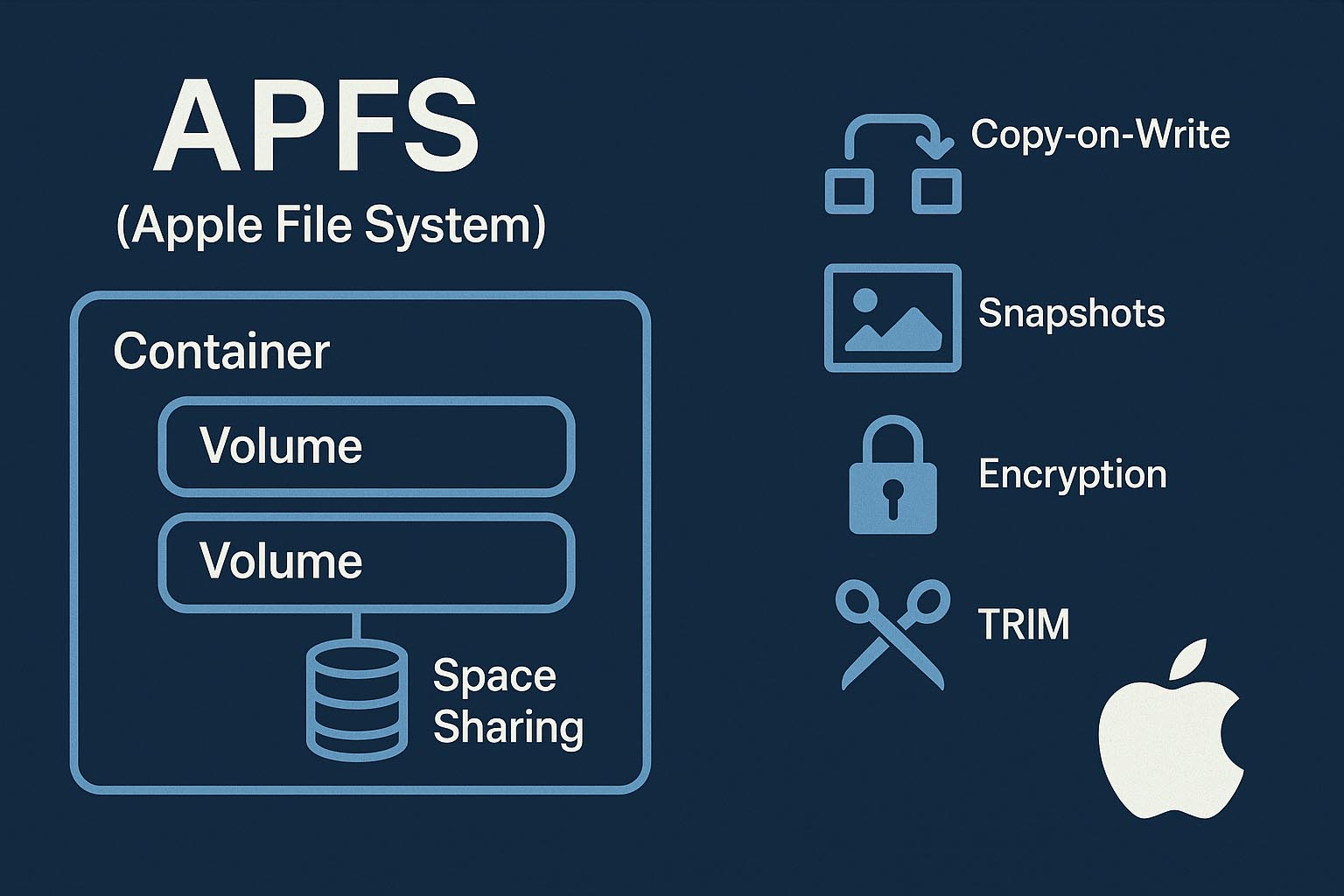

1. APFS (Apple File System)

APFS arrived in 2017 with macOS High Sierra (10.13) and now powers storage across Apple’s lineup—Mac, iPhone, iPad, Apple Watch, and Apple TV. It was designed first for flash/SSD media, fixes long-standing HFS+ limitations, and adds integrity and space-efficiency features.

Design Goals & Core Layout

- Container → Volumes model. A single APFS Container holds one or more volumes that share the same free space. The container’s superblock (entry point) records block size, block count, and other globals. A common container bitmap tracks which blocks are free vs. in use.

- Per-volume structures. Each volume has its own volume superblock plus independent trees for directories, files, and metadata. File and folder records are organized in B-trees (keys + values) for fast lookups and updates.

- Extents for file data. File content is stored in extents—start block + length. A dedicated extent B-tree maps every run of blocks that belongs to a file.

- Copy-on-Write (CoW). APFS never edits critical structures in place. Instead, it writes a new copy elsewhere and atomically switches pointers. This greatly reduces the chance of corruption from crashes or power loss.
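As a rough illustration of the container superblock as an entry point, the sketch below reads the block size and block count from the first block of a container. The field offsets follow Apple's published APFS reference (a 32-byte object header, then the `NXSB` magic, a 32-bit block size, and a 64-bit block count); the function name and the synthetic test block are hypothetical, and this is not a description of how Disk Drill parses APFS.

```python
import struct

NX_MAGIC = b"NXSB"       # container superblock magic as it appears on disk
OBJ_HEADER_SIZE = 32     # checksum (8) + object id (8) + transaction id (8) + type (4) + subtype (4)

def parse_container_superblock(block0: bytes) -> dict:
    """Read block size and block count from the first block of an APFS container."""
    if len(block0) < OBJ_HEADER_SIZE + 16:
        raise ValueError("need at least 48 bytes of block 0")
    if block0[OBJ_HEADER_SIZE:OBJ_HEADER_SIZE + 4] != NX_MAGIC:
        raise ValueError("APFS container magic not found")
    # Little-endian: 32-bit block size followed by 64-bit block count.
    block_size, block_count = struct.unpack_from("<IQ", block0, OBJ_HEADER_SIZE + 4)
    return {
        "block_size": block_size,
        "block_count": block_count,
        "container_bytes": block_size * block_count,
    }

# Synthetic block 0 for demonstration only (not taken from a real disk).
demo_block = bytes(OBJ_HEADER_SIZE) + NX_MAGIC + struct.pack("<IQ", 4096, 1000)
print(parse_container_superblock(demo_block))
```

A real scanner would also validate the object checksum and walk the checkpoint area to find the newest valid superblock; both steps are omitted here.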

What Happens When You Delete a File (APFS)

Deletion removes the file’s references from the volume’s trees (directory and allocation metadata). The space manager marks those blocks available for reuse, but the underlying data typically remains until it’s overwritten.

Because APFS uses CoW, older metadata versions may still exist on disk for a time, letting Disk Drill reconstruct paths and extent maps. However, APFS also builds encryption in at the volume level, and Apple strongly encourages it: it is often on by default on iOS devices and on many Macs with FileVault. When encryption is active, both user data and key metadata are protected, which raises the bar for recovery once keys or prior metadata are gone.
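To make the copy-on-write point concrete, here is a toy model, not APFS's actual on-disk logic. Each commit writes the directory to a new block and switches the root pointer; the previous version is only marked reusable, so a scan of freed blocks can still find it. The class `CowStore` and its methods are hypothetical names for illustration.

```python
class CowStore:
    """Toy copy-on-write metadata store: new versions never overwrite old ones in place."""

    def __init__(self):
        self.blocks = {}      # block number -> stored directory version
        self.next_block = 0
        self.root = None      # block number of the live metadata root
        self.free = set()     # blocks marked reusable but not yet overwritten

    def commit(self, directory: dict) -> None:
        """Write a new directory version to a fresh block, then switch the root pointer."""
        old_root = self.root
        self.blocks[self.next_block] = dict(directory)
        self.root = self.next_block
        self.next_block += 1
        if old_root is not None:
            self.free.add(old_root)   # bytes stay on disk until something reuses the block

    def live(self) -> dict:
        return self.blocks[self.root]

    def scan_stale(self) -> list:
        """What a recovery scan might still find: superseded versions in freed blocks."""
        return [self.blocks[b] for b in sorted(self.free)]

store = CowStore()
store.commit({"report.docx": (100, 8)})   # name -> (start block, length)
store.commit({})                          # the file is deleted in the new version
print(store.live())        # live tree no longer lists the file
print(store.scan_stale())  # the older version still describes it
```

In this model the stale version disappears only when its block is reused, which mirrors why the time since deletion and later write activity matter so much.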

What Happens When You Format (APFS)

A quick erase in Disk Utility creates fresh APFS metadata (new container/volume structures) and stops referencing the old trees; it doesn’t rewrite every user block. On SSDs, APFS supports TRIM, and macOS issues TRIM operations after space is reclaimed, which can prompt the drive to purge those freed blocks. If the volume is FileVault-encrypted, deleting/erasing the volume also destroys its encryption keys (a “crypto-erase”), rendering the prior data undecipherable even if blocks still exist.

After a standard (quick) erase, recovery may still locate orphaned APFS metadata and raw payload—unless TRIM has already reclaimed those blocks or the volume was crypto-erased. Once TRIM purges the freed space or keys are destroyed, software recovery is generally not feasible. Overwrite erases on HDDs likewise eliminate recoverable remnants.

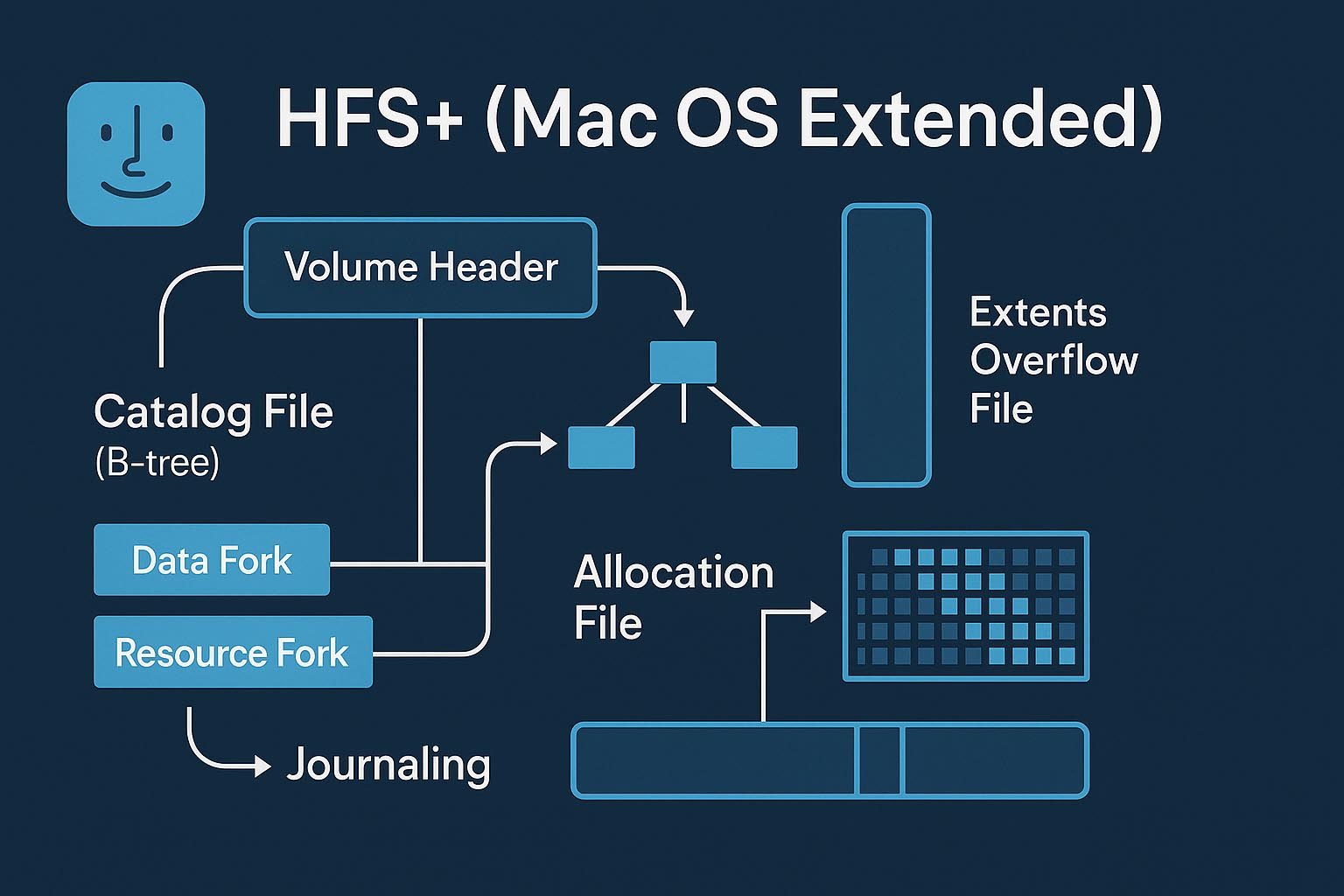

2. HFS+ (Mac OS Extended)

HFS+ (Hierarchical File System Plus), also known as Mac OS Extended, debuted with Mac OS 8.1 in 1998 and remained the default file system on Macs (and devices like iPod/Xserve) until APFS took over in macOS High Sierra 10.13. While aging, HFS+ still matters for backward compatibility and for accessing older Macs and legacy media.

Design Highlights & On-Disk Layout

- Volume Header: Located 1,024 bytes from the start of the volume, with an alternate copy near the end; stores global parameters plus pointers to all critical structures.

- Allocation model: Storage is divided into allocation blocks (optionally grouped into clumps to limit fragmentation). A bitmap-style Allocation File tracks which blocks are free vs. in use.

- Forks & extents: Each file can have a data fork (the actual content) and a resource fork (additional metadata/resources). Fork data is stored in extents (contiguous runs of allocation blocks defined by start + length).

- B-tree system files: Most metadata lives in specialized B-trees:

- Catalog File – directory hierarchy, names, timestamps, and the first eight extents of each fork.

- Extents Overflow File – extents beyond the first eight.

- Attributes File – extended attributes and extra per-file metadata.

- Journaling: A rolling Journal records metadata updates to help the file system recover quickly from crashes. It’s circular—older entries are eventually overwritten.

- Hard links: HFS+ supports hard links so a single file can appear in multiple directories; the Catalog stores link entries that reference the shared content, which is kept in a hidden private-data directory at the volume root.
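The layout above can be probed with a few lines of code. The sketch below reads the volume header and pulls out the global counters. The offsets (header at byte 1,024, big-endian fields, `H+` or `HX` signature) follow Apple's Technical Note TN1150 rather than anything stated in this article, and the function name and synthetic sample are hypothetical.

```python
import struct

HFS_HEADER_OFFSET = 1024                       # header position per TN1150
SIGNATURES = {b"H+": "HFS+", b"HX": "HFSX"}    # case-insensitive vs case-sensitive variant

def parse_hfs_plus_header(volume_start: bytes) -> dict:
    """Extract a few global parameters from the first 2 KiB of an HFS+ volume."""
    header = volume_start[HFS_HEADER_OFFSET:HFS_HEADER_OFFSET + 52]
    if len(header) < 52:
        raise ValueError("need at least 1076 bytes from the start of the volume")
    kind = SIGNATURES.get(header[:2])
    if kind is None:
        raise ValueError("HFS+ signature not found")
    # Big-endian 32-bit fields starting at header offset 32.
    file_count, folder_count, block_size, total_blocks, free_blocks = struct.unpack_from(
        ">5I", header, 32
    )
    return {
        "kind": kind,
        "file_count": file_count,
        "folder_count": folder_count,
        "block_size": block_size,
        "total_blocks": total_blocks,
        "free_blocks": free_blocks,
    }

# Synthetic volume start for demonstration only.
demo = bytes(1024) + b"H+" + struct.pack(">H", 4) + bytes(28) + struct.pack(">5I", 10, 2, 4096, 1000, 250)
print(parse_hfs_plus_header(demo))
```

If the primary header is damaged, the same parser could be pointed at the alternate header near the end of the volume.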

What Happens When You Delete a File (HFS+)

Deletion updates the Catalog File (B-tree) to remove the file’s record and, for hard links, drops the link entry from its directory. The Allocation File flips the file’s blocks to free, but the payload typically remains on disk until reused. Journal entries may briefly reference the change until the journal wraps.
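The "until the journal wraps" caveat is easy to picture with a fixed-size ring buffer. This is a simplified model, assuming one entry per metadata change; the real HFS+ journal stores transaction blocks, not tuples, and `CircularJournal` is a hypothetical name.

```python
from collections import deque

class CircularJournal:
    """Fixed-capacity log: once full, each new entry pushes out the oldest one."""

    def __init__(self, capacity: int):
        self.entries = deque(maxlen=capacity)

    def log(self, operation: str, name: str) -> None:
        self.entries.append((operation, name))

    def mentions(self, name: str) -> bool:
        """True while some surviving entry still refers to the given file."""
        return any(entry_name == name for _, entry_name in self.entries)

journal = CircularJournal(capacity=3)
journal.log("delete", "thesis.pages")
print(journal.mentions("thesis.pages"))   # the deletion is still in the log

# Ordinary activity after the deletion fills the journal and wraps it.
for n in range(3):
    journal.log("create", f"cache-{n}.tmp")
print(journal.mentions("thesis.pages"))   # the hint has been overwritten
```

This is why continued use of the volume after a deletion lowers the odds of recovering names and paths from journal remnants.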

If Catalog and extent records survive, Disk Drill’s Quick Scan can reconstruct the file with its original name, folder, timestamps, and forks. If Catalog entries are gone or the journal has rolled over, recovery falls back to Deep Scan (signature carving). Carving can restore contiguous data but usually loses names/paths and struggles with heavily fragmented forks—especially large videos or archives.

What Happens When You Format (HFS+)

A quick format reinitializes core metadata (e.g., Catalog layout) to a default, effectively wiping prior directory records while leaving the data area and the Journal content on disk until reused.

Some metadata can be reconstructed by parsing residual journal and B-tree fragments; the remainder is recovered via signature carving from the data area. Success depends heavily on fragmentation and post-format activity. As always, Preview in Disk Drill Basic is your proof the content is intact before you finalize recovery.

Linux File Systems. Behind the Scenes and Your Recovery Chances

Unlike Windows and macOS, which are tightly controlled by Microsoft and Apple, Linux is an open-source ecosystem with countless distributions (Ubuntu, Fedora, Debian, Arch, etc.) and a kernel that supports a wide range of file systems. That flexibility is why you’ll see different defaults across distros—but in practice the most common Linux formats are the Ext family (Ext2/Ext3/Ext4), XFS, Btrfs, F2FS, JFS, and ReiserFS. In the sections that follow, we’ll outline how some of them work, their performance and integrity features (journals, copy-on-write, checksums, snapshots), and—crucially—what each design implies for data recovery with Disk Drill.

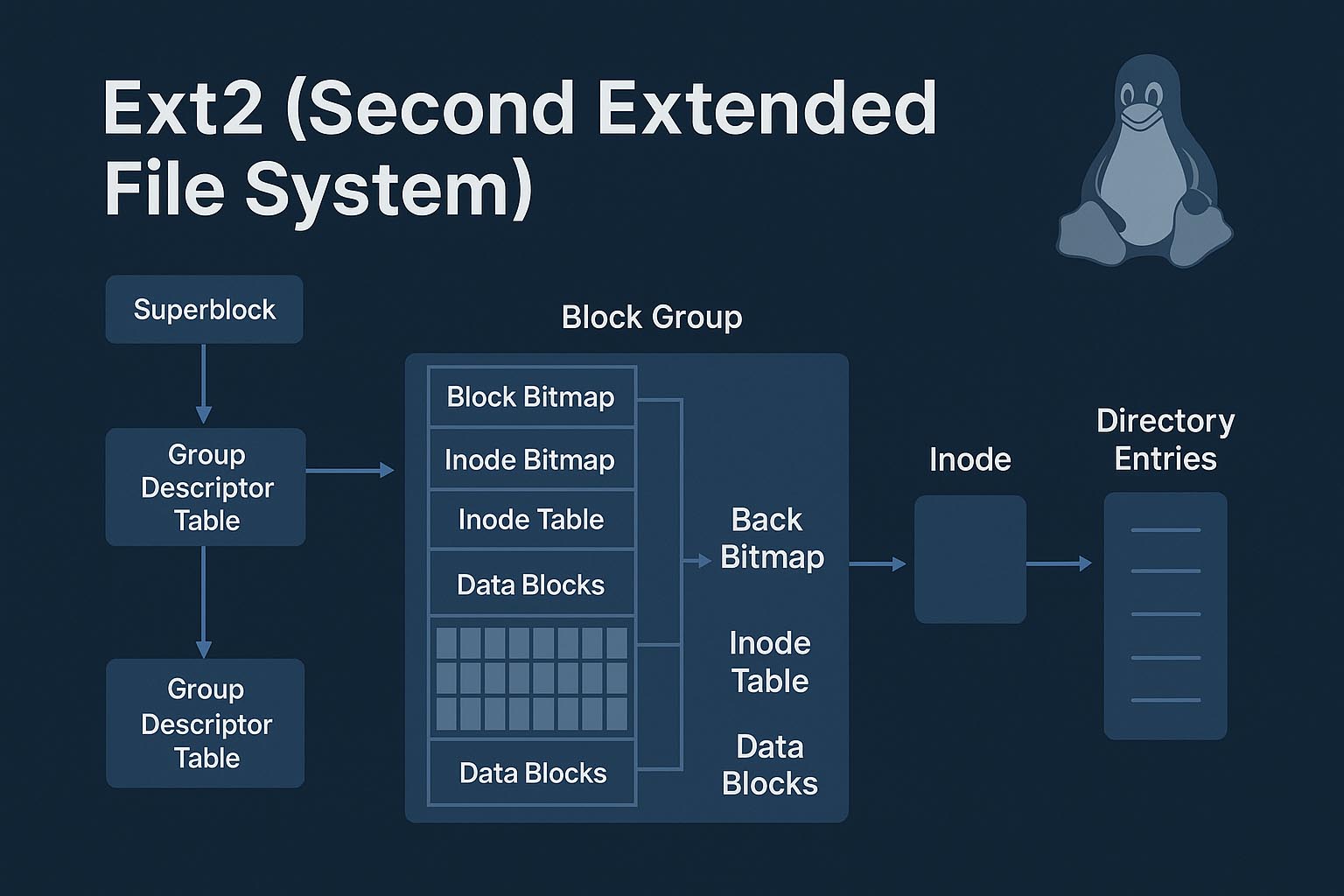

1. Ext2 (Second Extended File System)

Ext2 organizes space into fixed-size blocks, grouped into Block Groups for locality and faster allocation. Each group maintains a Block Bitmap (which blocks are free/used), an Inode Bitmap (which inodes are free/used), and an Inode Table that stores one inode per file or directory. An inode holds the object’s metadata (size, timestamps, permissions) and pointers to its data blocks. Filenames are not part of the inode; they live in directories, which are special files whose contents are directory entries mapping name → inode number. Because names are aliases to inodes, a single file can have multiple hard links across the tree, which is convenient operationally but can complicate name reconstruction during recovery.
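A minimal sketch of how a tool might confirm it is looking at an Ext volume and work out the block-group geometry described above. The superblock offset (1,024 bytes), the `0xEF53` magic, and the field positions come from the Linux kernel's on-disk format documentation; the helper names and the synthetic image are hypothetical.

```python
import struct

SUPERBLOCK_OFFSET = 1024
EXT_MAGIC = 0xEF53   # shared by Ext2, Ext3, and Ext4

def parse_ext_superblock(image: bytes) -> dict:
    """Read basic geometry from an Ext2/3/4 superblock (all fields little-endian)."""
    sb = image[SUPERBLOCK_OFFSET:SUPERBLOCK_OFFSET + 1024]
    if len(sb) < 58 or struct.unpack_from("<H", sb, 56)[0] != EXT_MAGIC:
        raise ValueError("Ext superblock magic not found")
    inodes_count, blocks_count = struct.unpack_from("<II", sb, 0)
    first_data_block, log_block_size = struct.unpack_from("<II", sb, 20)
    blocks_per_group = struct.unpack_from("<I", sb, 32)[0]
    inodes_per_group = struct.unpack_from("<I", sb, 40)[0]
    if blocks_per_group == 0:
        raise ValueError("corrupt superblock: zero blocks per group")
    # Ceiling division: a partial final group still counts as a group.
    group_count = -(-(blocks_count - first_data_block) // blocks_per_group)
    return {
        "block_size": 1024 << log_block_size,
        "blocks_count": blocks_count,
        "inodes_count": inodes_count,
        "inodes_per_group": inodes_per_group,
        "group_count": group_count,
    }

def make_demo_image() -> bytes:
    """Build a synthetic 2 KiB image with a plausible superblock (demonstration only)."""
    image = bytearray(2048)
    struct.pack_into("<II", image, SUPERBLOCK_OFFSET + 0, 32768, 100000)
    struct.pack_into("<II", image, SUPERBLOCK_OFFSET + 20, 0, 2)   # 1024 << 2 = 4096
    struct.pack_into("<I", image, SUPERBLOCK_OFFSET + 32, 32768)
    struct.pack_into("<I", image, SUPERBLOCK_OFFSET + 40, 8192)
    struct.pack_into("<H", image, SUPERBLOCK_OFFSET + 56, EXT_MAGIC)
    return bytes(image)

print(parse_ext_superblock(make_demo_image()))
```

Knowing the group count and inodes per group tells a scanner where each group's bitmaps and inode table should sit, which is the starting point for metadata-based recovery.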

What Happens When You Delete a File (Ext2)

Deletion marks the file’s inode as free, flips its data blocks to free in the Block Bitmap, and removes the directory entry (name → inode) that linked the filename to the inode number. The payload typically remains on disk until new writes reuse those blocks.
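The deletion steps can be modelled in a few lines. The toy volume below is a deliberately simplified stand-in for Ext2 (one bitmap, dictionary-based inodes and directory, hypothetical names throughout). It shows that deleting flips bits and drops the name while the payload bytes stay where they were.

```python
class TinyVolume:
    """Toy model of an Ext2-style volume: block bitmap, inodes, and a flat directory."""

    def __init__(self, block_count: int, block_size: int = 16):
        self.block_size = block_size
        self.data = bytearray(block_count * block_size)
        self.bitmap = bytearray((block_count + 7) // 8)   # one bit per block
        self.directory = {}   # name -> inode number
        self.inodes = {}      # inode number -> {"blocks", "size", "in_use"}

    def _set_bit(self, block: int, used: bool) -> None:
        byte, bit = divmod(block, 8)
        if used:
            self.bitmap[byte] |= 1 << bit
        else:
            self.bitmap[byte] &= ~(1 << bit) & 0xFF

    def is_used(self, block: int) -> bool:
        byte, bit = divmod(block, 8)
        return bool((self.bitmap[byte] >> bit) & 1)

    def write_file(self, name: str, inode_no: int, blocks: list, payload: bytes) -> None:
        bs = self.block_size
        for i, block in enumerate(blocks):
            chunk = payload[i * bs:(i + 1) * bs]
            self.data[block * bs:block * bs + len(chunk)] = chunk
            self._set_bit(block, True)
        self.inodes[inode_no] = {"blocks": list(blocks), "size": len(payload), "in_use": True}
        self.directory[name] = inode_no

    def delete(self, name: str) -> None:
        """Drop the name, free the inode, clear the bitmap bits; the data is not touched."""
        inode = self.inodes[self.directory.pop(name)]
        inode["in_use"] = False
        for block in inode["blocks"]:
            self._set_bit(block, False)

    def recover_by_inode(self, inode_no: int) -> bytes:
        """Follow the stale inode's block pointers, as a metadata-based scan would."""
        inode, bs = self.inodes[inode_no], self.block_size
        raw = b"".join(bytes(self.data[b * bs:(b + 1) * bs]) for b in inode["blocks"])
        return raw[:inode["size"]]

vol = TinyVolume(block_count=8)
vol.write_file("notes.txt", 12, [2, 5], b"hello, recoverable world")
vol.delete("notes.txt")
print(vol.is_used(2), "notes.txt" in vol.directory)   # blocks free, name gone
print(vol.recover_by_inode(12))                        # content still readable
```

The file here is fragmented across blocks 2 and 5, yet it comes back intact because the stale inode still lists both; the name is lost because it lived only in the directory.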

- Non-fragmented files: If the inode hasn’t been reused and its block pointers still reference intact blocks, Disk Drill can usually rebuild the entire file content with high accuracy. The original filename and path are lost, since names lived only in the removed directory entry.

- Fragmented files: The inode still carries pointers to all fragments, so recovery can be just as accurate if every fragment remains. However, fragmentation raises the chance that some blocks were already overwritten, leading to partial results. Filenames/paths remain unrecoverable.

What Happens When You Format (Ext2)

A fresh Ext2 reinitializes superblock/group descriptors/bitmaps and typically clears or rebuilds inode tables, so prior directory/inode metadata is effectively gone even if user data bytes persist.

- Non-fragmented files: With inode and directory metadata wiped, recovery relies on Deep Scan (RAW signature carving) to detect contiguous file structures. You may recover content, but names/paths and many timestamps are lost.

- Fragmented files: Without inode maps, carving has no reliable way to stitch fragments back together. Large or heavily fragmented files are likely to be partial or corrupted, unless fragments happen to remain adjacent by chance.
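The contrast between contiguous and fragmented files shows up clearly in a bare-bones signature carver. The sketch below looks for JPEG start and end markers in raw bytes. It illustrates the general technique, not Disk Drill's scanner, which the article does not describe at this level; the function name and sample data are hypothetical.

```python
JPEG_HEADER = b"\xff\xd8\xff"   # start-of-image marker plus first marker byte
JPEG_FOOTER = b"\xff\xd9"       # end-of-image marker

def carve_jpegs(raw: bytes, max_size: int = 20 * 1024 * 1024) -> list:
    """Return every header-to-footer byte run found in raw, assuming contiguous storage."""
    found = []
    pos = 0
    while True:
        start = raw.find(JPEG_HEADER, pos)
        if start == -1:
            break
        end = raw.find(JPEG_FOOTER, start + len(JPEG_HEADER))
        if end == -1:
            break                      # no footer anywhere after this header
        if end + 2 - start > max_size:
            pos = start + 1            # implausibly large; try the next header
            continue
        found.append(raw[start:end + 2])
        pos = end + 2
    return found

picture = b"\xff\xd8\xff\xe0" + b"IMAGE-DATA" + b"\xff\xd9"

# Contiguous file surrounded by unrelated bytes: carved exactly.
contiguous = b"\x00" * 10 + picture + b"\x00" * 5
print(carve_jpegs(contiguous) == [picture])

# Same file split by another file's data: the carver cannot tell, so the result is polluted.
fragmented = b"\x00" * 4 + picture[:8] + b"OTHER-FILE" + picture[8:]
print(carve_jpegs(fragmented)[0] == picture)
```

The second case still yields a "file", but it contains foreign bytes in the middle, which is the partial or corrupted outcome described above.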

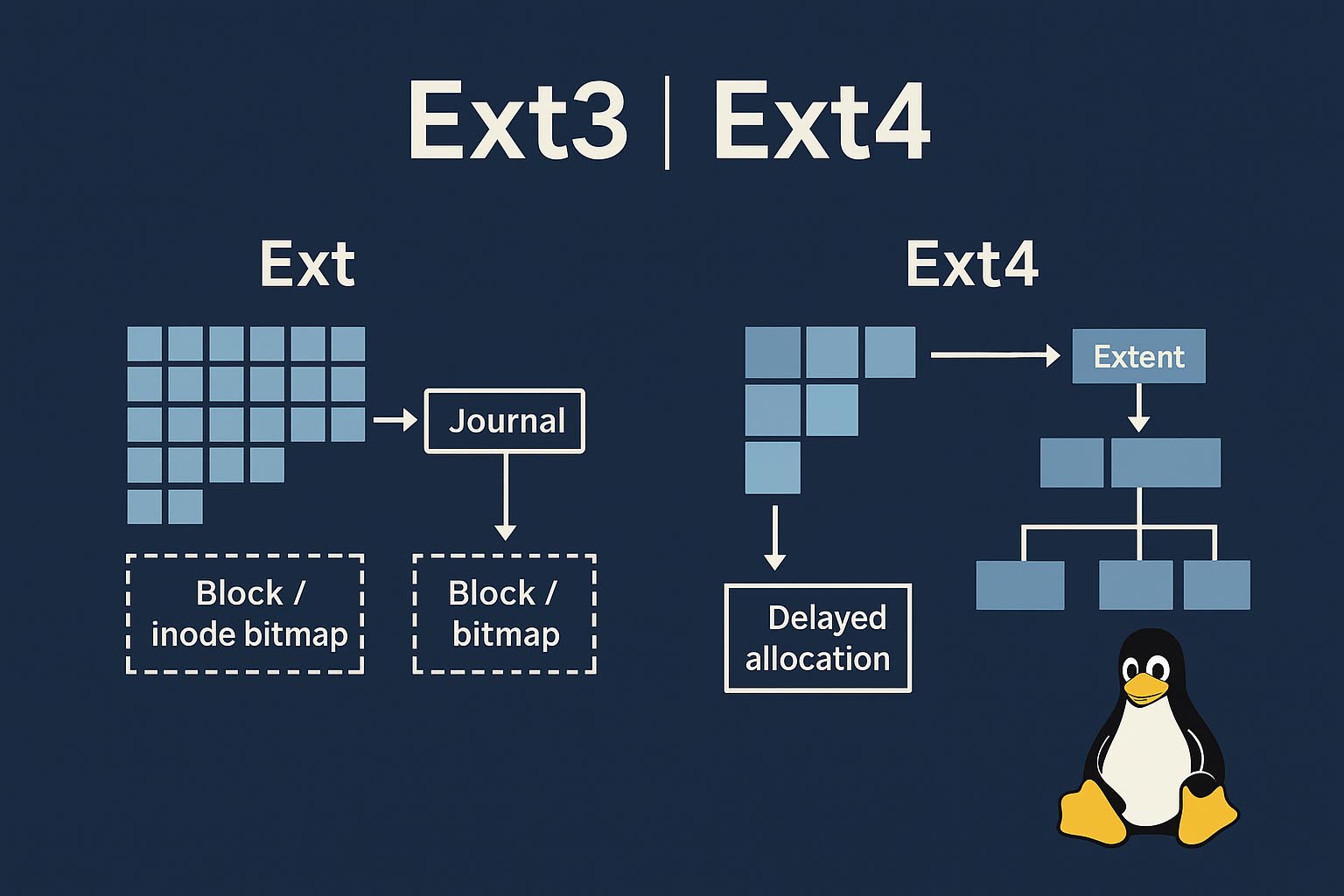

2. Ext3 and Ext4

Ext3 is essentially Ext2 + journaling: every metadata change is first written to a journal (a log) before it’s committed to the main structures, which greatly improves crash consistency. Ext4 evolves the design with extents (contiguous runs of blocks described by start + length), a delayed allocation strategy that batches writes to reduce fragmentation, and an on-disk extent tree (stored inline in the inode for up to ~4 extents, then expanded hierarchically for larger files). Together these features make Ext4 one of the most capable, general-purpose Linux file systems for desktops and servers.
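As an illustration of the extent tree's inline form, the sketch below decodes the 60-byte block-pointer area of an Ext4 inode when it holds a leaf node (depth 0) and translates a logical block to a physical one. The 12-byte header and 12-byte extent layouts and the `0xF30A` magic follow the Linux kernel's Ext4 documentation; the function names and sample bytes are hypothetical, and flags such as uninitialized extents are ignored.

```python
import struct

EXT4_EXTENT_MAGIC = 0xF30A

def parse_inline_extents(i_block: bytes) -> list:
    """Decode a depth-0 extent node into (logical_start, length, physical_start) tuples."""
    magic, entries, _max_entries, depth, _generation = struct.unpack_from("<HHHHI", i_block, 0)
    if magic != EXT4_EXTENT_MAGIC:
        raise ValueError("extent header magic not found")
    if depth != 0:
        raise ValueError("index node: the extents live in child blocks, not in the inode")
    extents = []
    for i in range(entries):
        logical, length, start_hi, start_lo = struct.unpack_from("<IHHI", i_block, 12 + 12 * i)
        extents.append((logical, length, (start_hi << 32) | start_lo))
    return extents

def logical_to_physical(extents: list, logical_block: int):
    """Map a file-relative block number to a block on disk, or None if unmapped."""
    for logical, length, physical in extents:
        if logical <= logical_block < logical + length:
            return physical + (logical_block - logical)
    return None

# Synthetic inode area: two extents, padded to 60 bytes (demonstration only).
demo = struct.pack("<HHHHI", EXT4_EXTENT_MAGIC, 2, 4, 0, 0)
demo += struct.pack("<IHHI", 0, 8, 0, 1000)    # file blocks 0-7 at disk block 1000
demo += struct.pack("<IHHI", 8, 4, 0, 5000)    # file blocks 8-11 at disk block 5000
demo = demo.ljust(60, b"\x00")

extents = parse_inline_extents(demo)
print(extents)
print(logical_to_physical(extents, 9))
```

Two short records describe twelve blocks here, which is why surviving extent metadata is so valuable: without it, a scanner has no way to know that the file jumps from block 1007 to block 5000.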

What Happens When You Delete a File (Ext3/4)

The delete operation is recorded in the journal, then the file’s inode is marked free in the Inode Bitmap, and its extents/blocks are released in the Block Bitmap. The directory entry that mapped name → inode is removed, typically by folding its space into the preceding variable-length directory record, which breaks the link between the name and the object.

- Non-fragmented files: If the inode hasn’t been reused and the journal still holds recent metadata for that deletion, Disk Drill can reconstruct the file’s content and sometimes its original name/path from journal records. The longer the system runs after deletion, the less likely those journal hints survive.

- Fragmented files: Extents make fragmentation less common, but when present the odds drop: scattered runs are harder to keep intact. Journal metadata may still help for very recent deletions, yet results are more hit-or-miss than with contiguous files.
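One way to look for the journal remnants mentioned above is to scan the journal area for block headers. The sketch below assumes the JBD2 format used by Ext3/Ext4, where each journal metadata block begins with a big-endian magic number (`0xC03B3998`), a block type, and a transaction sequence number. The function name, the tiny 64-byte demo blocks, and the output shape are hypothetical.

```python
import struct

JBD2_MAGIC = 0xC03B3998
BLOCK_TYPES = {1: "descriptor", 2: "commit", 3: "superblock v1", 4: "superblock v2", 5: "revoke"}

def scan_journal(journal: bytes, block_size: int = 4096) -> list:
    """List (block index, block type, sequence) for every journal header found."""
    hits = []
    for offset in range(0, len(journal) - 11, block_size):
        magic, block_type, sequence = struct.unpack_from(">III", journal, offset)
        if magic == JBD2_MAGIC:
            hits.append((offset // block_size, BLOCK_TYPES.get(block_type, "unknown"), sequence))
    return hits

def _header(block_type: int, sequence: int, block_size: int = 64) -> bytes:
    """Build a synthetic journal block containing only a header (demonstration only)."""
    return struct.pack(">III", JBD2_MAGIC, block_type, sequence).ljust(block_size, b"\x00")

# Superblock, then one transaction: descriptor, a logged metadata block, commit.
demo_journal = _header(4, 0) + _header(1, 7) + b"D" * 64 + _header(2, 7)
print(scan_journal(demo_journal, block_size=64))
```

Blocks that sit between a descriptor and its commit are copies of metadata blocks (directory blocks, inode table blocks) as they looked at that transaction, which is where pre-deletion names and extent maps can sometimes be found.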

What Happens When You Format (Ext3/4)

Making a new Ext3/Ext4 file system reinitializes Block Groups (superblock/backup superblocks, group descriptors, bitmaps), rebuilds inode tables, and resets the journal, discarding prior log entries. Some tools initialize these structures lazily, but from a recovery standpoint the old directory/inode maps are effectively gone.

- Non-fragmented files: With metadata wiped, recovery falls back to Deep Scan / RAW signature carving. You may get clean payload for contiguous files, but names, folders, and most timestamps are lost unless very recent journal remnants remain.

- Fragmented files: Without surviving inode/extent maps, carving can’t reliably stitch multiple runs together; large or fragmented files are likely to be partial or corrupted.

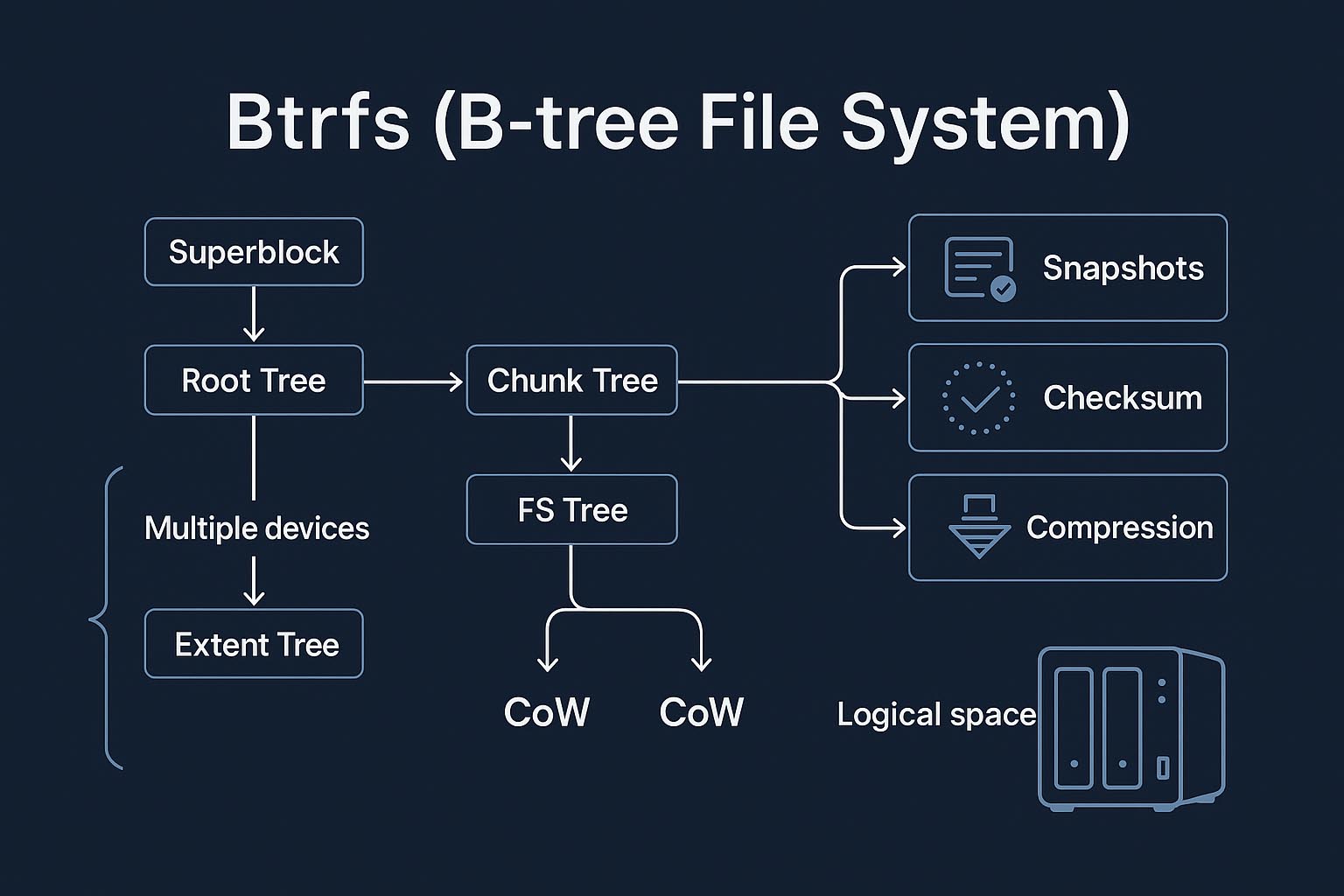

3. Btrfs (B-tree File System)

Btrfs is a next-generation Linux file system designed for everything from laptops to multi-device storage pools. Supported in the mainline kernel since 2009 (and the default on Fedora and openSUSE), it combines a modern on-disk design with features you’d normally expect from a logical volume manager.

Design Goals & Architecture

- All-B-tree layout. Every major structure is a B+-tree: a root points to specialized trees that hold metadata and data mappings.

- Root tree – entry point to the others (its location is noted in the superblock).

- Chunk tree – maps logical addresses → physical devices/offsets and records which devices form the pool.

- Device tree – cross-references physical blocks → logical space.

- File-system (FS) tree – directories, filenames, and inode items (size, perms, timestamps) plus extent items for file data.

- Extent tree – global allocation record that tracks every extent in use, along with back-references to its owners; free space is whatever it does not list.

- Copy-on-Write (CoW) everywhere. Btrfs doesn’t overwrite critical blocks in place. It writes modified metadata/data to new locations and then atomically flips pointers. This eliminates the need for a traditional journal and reduces corruption risk from crashes.

- Extents, not per-block lists. Files are stored in variable-length extents (start + length). Small files may be stored inline; large files reference one or more extents.

- Built-in pooling. A single Btrfs file system can span multiple devices without LVM. Space is presented as one logical pool, with the chunk/device trees handling placement.
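The chunk tree's role is easiest to see as a lookup from a logical address to a device and physical offset. The sketch below is a simplified model that assumes single-stripe chunks (no RAID striping or mirroring) and uses hypothetical names; real Btrfs chunk items carry stripe lists and profile flags.

```python
from bisect import bisect_right
from typing import NamedTuple

class Chunk(NamedTuple):
    logical_start: int    # first logical byte covered by this chunk
    length: int           # bytes covered
    device_id: int        # pool member holding the data
    physical_start: int   # byte offset on that device

def resolve(chunks: list, logical: int) -> tuple:
    """Translate a logical address to (device_id, physical_offset).

    chunks must be sorted by logical_start.
    """
    starts = [c.logical_start for c in chunks]
    i = bisect_right(starts, logical) - 1
    if i < 0:
        raise LookupError("address below the first chunk")
    chunk = chunks[i]
    if logical >= chunk.logical_start + chunk.length:
        raise LookupError("address falls in a gap between chunks")
    return chunk.device_id, chunk.physical_start + (logical - chunk.logical_start)

# Two-device pool: consecutive logical ranges live on different devices.
pool = [
    Chunk(logical_start=0, length=1000, device_id=1, physical_start=5000),
    Chunk(logical_start=1000, length=1000, device_id=2, physical_start=0),
]
print(resolve(pool, 10))
print(resolve(pool, 1500))
```

Every file extent in the other trees is expressed in logical addresses, so a recovery tool that cannot rebuild this mapping cannot locate data on a multi-device pool even if the file-system tree survives.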

What Happens When You Delete a File (Btrfs)

Deletion updates the FS tree to remove directory items and the file’s inode item, and updates the Extent tree to release its extents. Because of Copy-on-Write (CoW), earlier versions of those tree nodes often still exist on disk until space is reused, and snapshots/subvolumes may continue to reference the same extents.

Disk Drill can frequently walk older FS/Extent tree nodes to rebuild the filename, path, and extent map and restore content—provided those historical nodes and extents haven’t been recycled or trimmed. As allocator activity grows (especially after large/mass deletions), Btrfs reuses space non-sequentially, so chances diminish and results may become partial. If prior metadata is gone, Disk Drill falls back to signature (RAW) carving, which recovers content but usually loses names and folders.
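Why snapshots keep deleted data alive can be sketched with reference counts. This is a toy model with hypothetical names; Btrfs tracks references through back-reference items in the extent tree, not a plain counter. The idea it shows is the same: an extent returns to free space only when nothing points at it any more.

```python
class ExtentPool:
    """Toy reference counter for shared extents."""

    def __init__(self):
        self.refs = {}   # extent id -> number of trees referencing it

    def reference(self, extent: str) -> None:
        self.refs[extent] = self.refs.get(extent, 0) + 1

    def release(self, extent: str) -> bool:
        """Drop one reference; return True only if the extent became free space."""
        self.refs[extent] -= 1
        if self.refs[extent] == 0:
            del self.refs[extent]
            return True
        return False

extents = ExtentPool()
extents.reference("extent-A")   # the live subvolume's file
extents.reference("extent-A")   # a snapshot taken before the deletion

print(extents.release("extent-A"))   # file deleted in the live subvolume: still held
print(extents.release("extent-A"))   # snapshot deleted too: now reusable
```

If a snapshot from before the deletion exists, the simplest recovery path is usually to copy the file out of the snapshot, with no scanning needed.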

What Happens When You Format (Btrfs)

Creating a new Btrfs file system reinitializes the superblock and core trees (FS/Chunk/Extent), so the old trees are no longer referenced even though many blocks may still hold prior bytes until reused.

Success hinges on discovering orphaned copies of former tree blocks and extent items; when found, Disk Drill can reconstruct directories and file layouts. If not, recovery degrades to RAW carving without original names/paths and with a higher risk of incomplete large files. Multi-device pools add complexity because the old logical↔physical mappings must also be rediscovered, and ongoing writes or TRIM quickly erode what remains.

Conclusion: No Promises — But You Can Get Certainty

There’s no universal guarantee in data recovery. Physics (overwrites), firmware (SSD TRIM/garbage collection), formats (full format zero-fill, crypto-erase), and design choices (journaling, copy-on-write, fragmentation, encryption) set hard limits. That said, you don’t have to guess: Disk Drill Basic lets you scan and Preview the exact file before you pay. If the Preview opens, you have practical proof the content is intact.