Here at CleverFiles, the authenticity and reliability of the material we provide are important to us. Behind every review and tutorial stands a cross-functional crew: data-recovery engineers who spend their days in professional labs, dedicated QA specialists who stress-test software on a wide range of hardware, and an editorial desk that turns raw findings into readable guidance.

Below, you’ll find exactly who runs the tests, what we test, and how each review or test moves from first idea to publication.

What We Cover

We benchmark and compare:

| Desktop software | Mobile apps |
| --- | --- |
| Data-recovery suites for Windows, macOS, and Linux. | iOS and iPadOS recovery apps, iPhone and iPad storage cleaners, and file organizers. |

We track every active player in these fields, watch release notes, and add newcomers to our queue the moment they ship. All titles, whether commercial or free, enter the same pipeline, so a newcomer competes on equal ground with long‑established brands.

The Team Behind Every App Test

At CleverFiles, our reviewers are specialists in data recovery and software analysis with decades of combined experience. They work with this technology every day, so each review is backed by extensive hands-on testing and relevant knowledge.

Brett Johnson is an accomplished Data Recovery Specialist who spent seven years perfecting his skills at Seagate Technology before joining ACE Data Recovery in 2015. With a Bachelor’s degree in Computer Systems Networking and Telecommunications, he brings his extensive expertise to the verification and validation of every piece of content we publish.

Meanwhile, Alexei Vaschenko, Lead Quality Assurance Engineer, and Oleksandr Lukashyn, QA Specialist, are long-time CleverFiles team members who bring more than five years of rigorous, methodical testing experience to each application and device configuration. They record how every app behaves across devices and operating systems so that no detail is overlooked.

David Morelo, our Senior Editor, draws on his decades of experience in tech content writing to create engaging and reader-friendly articles that bring complex technology concepts to life. His expertise spans data recovery, cybersecurity, software development, and more, providing the perfect balance of depth and accessibility.

| Role | Name(s) | What They Do |
| --- | --- | --- |
| Data Recovery Experts | Brett Johnson | Validate technical accuracy against real lab results |
| QA Specialists | Alex Vaschenko, Alex Lukashyn | Run every test and record the raw data |
| In-house Editors | Our editorial team | Turn results into clear, practical guides |
| Senior Editor | David Morelo | Gives the final sign-off before publication |

Our core team has spent more than a decade building, breaking, and rescuing storage devices. Every review and guide you read on CleverFiles rests on that hands‑on background.

How Our Software Test Workflow Looks

Every software review you read is the product of this five-step cycle, and it re-enters the cycle whenever data recovery approaches, software versions, or user needs change.

Step 1: Topic Planning

Editors begin with user pain points. They scan discussion boards, support tickets, and price lists, then select common solutions and desktop applications or mobile apps that claim to solve those exact problems. The goal is a test list that mirrors what real people do or install when they lose files or run out of space.

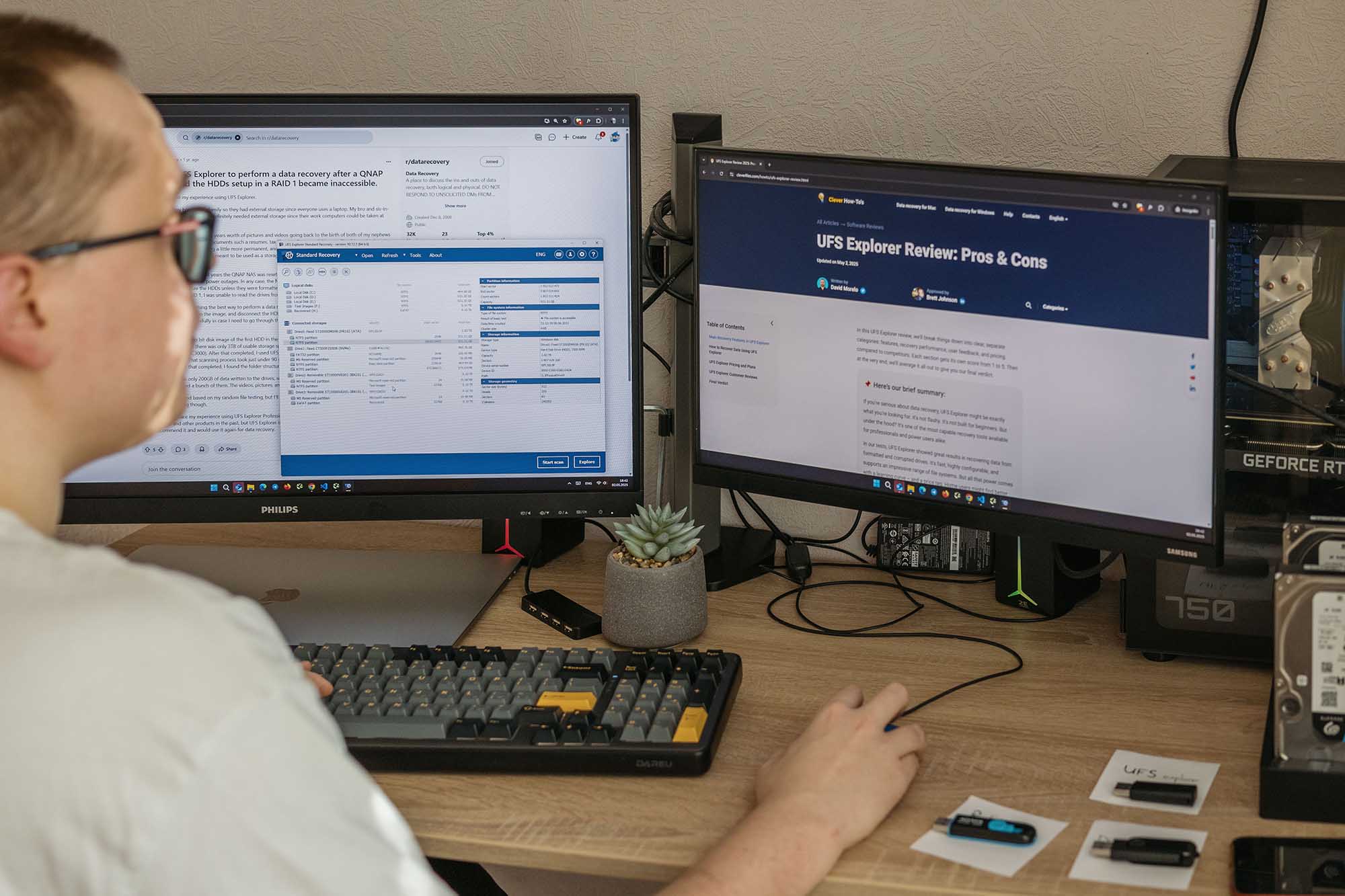

Step 2: Hands‑On Testing

Our QA specialists thoroughly test desktop recovery suites and iOS cleaner/organizer apps. On the desktop side, they install each program on Windows and macOS test machines and walk through every advertised feature. That typically includes different data loss scenarios, every scan mode the tool offers, and byte-to-byte imaging, along with how long each task takes and how many files return intact. They also check whether the developers' claims match the actual results.
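To make "how many files return intact" concrete, here is a minimal sketch of one way such a check could be automated. It assumes a reference copy of the test dataset is kept alongside the tool's output folder, and it matches files by content hash rather than by name, since recovered files often lose their original names. The function names `sha256_of` and `intact_rate` are hypothetical and not part of any tool described here.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large videos do not fill memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def intact_rate(reference_dir: Path, recovered_dir: Path) -> float:
    """Share of reference files whose exact content exists in the recovered output.

    Assumption: a file counts as intact only if it is byte-for-byte identical.
    """
    reference = [p for p in reference_dir.rglob("*") if p.is_file()]
    if not reference:
        return 0.0
    recovered_hashes = {
        sha256_of(p) for p in recovered_dir.rglob("*") if p.is_file()
    }
    intact = sum(1 for p in reference if sha256_of(p) in recovered_hashes)
    return intact / len(reference)
```

A byte-exact comparison is deliberately strict: a photo that opens but has a damaged tail would count as not intact, so partially usable files would still need a manual look.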

For iPhone and iPad utilities, the same specialists run each app on multiple iOS versions, measure the space reclaimed after duplicate-photo removal, cache clearing, and media compression routines, and log any issues.

Step 3: Editorial Review

Editors take raw test results and turn them into a reader-friendly guide. This means ranking the results according to typical user questions, adding context about price levels and hidden costs, adding screenshots, and incorporating practical tips that came up during hands-on testing.

Step 4: Expert Verification

Data‑recovery engineers perform a technical proofread. They verify that terminology is precise, success‑rate claims match industry experience, and any risk warnings are accurate. When something can be done faster, more safely, or with an extra command‑line switch, they add those notes. The goal is to align every recommendation with best practices they see daily in the professional recovery lab.

Step 5: Publication & Updates

Once the Senior Editor signs off, the review goes live. We revisit each piece when an app ships a major update, prices change, or readers report new issues.

Our tests and reviews can be trusted because we are strongly committed to these principles:

- No pay-for-placement. Every product we test earns its score through regular testing, not sponsorship.

- Real hardware, real failures. All tests we run are reproduced by our QA specialists in real conditions and on real devices.

- Live updates. Major OS releases, pricing shifts, or feature overhauls trigger a fresh test pass.

- Open ears. Comment threads, support tickets, and social channels feed into our backlog, so the next update answers the questions you raise today.

How We Test Data Recovery Software

Our testing approach depends on the platform the software targets. Our QA specialists have unrestricted access to all the device types and software they need. This includes problematic devices with various malfunctions, allowing them to test software and problem-solving techniques in real-world conditions.

What We Measure on Windows

Our Windows bench includes legacy BIOS PCs, modern UEFI builds, and virtual machines for unsafe samples. We stage NTFS, exFAT, FAT32, and ReFS volumes, then trigger typical failures: accidental deletion, quick format, damaged partition tables, and multi‑disk RAID degradation. Recovery speed is clocked on three datasets (mixed office files, raw photos, and fragmented video). We also verify how well a program reconstructs original folder trees and filenames.
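How well a program reconstructs folder trees and filenames can be expressed as two simple ratios. The sketch below is an illustration under stated assumptions, not a description of the lab's actual tooling: it assumes a reference copy of the staged data and the tool's output folder are both available on disk, and the names `relative_files` and `structure_score` are hypothetical.

```python
from pathlib import Path, PurePosixPath


def relative_files(root: Path) -> set[str]:
    """Relative POSIX-style paths of every file under root."""
    return {p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()}


def structure_score(reference_dir: Path, recovered_dir: Path) -> dict[str, float]:
    """Compare recovered output with the reference layout.

    paths_preserved: share of reference files found at the same relative path.
    names_preserved: share of reference files whose filename appears anywhere
    in the output, even if the folder tree was flattened.
    """
    ref_paths = relative_files(reference_dir)
    rec_paths = relative_files(recovered_dir)
    if not ref_paths:
        return {"paths_preserved": 0.0, "names_preserved": 0.0}
    rec_names = {PurePosixPath(p).name for p in rec_paths}
    paths_ok = len(ref_paths & rec_paths)
    names_ok = sum(1 for p in ref_paths if PurePosixPath(p).name in rec_names)
    return {
        "paths_preserved": paths_ok / len(ref_paths),
        "names_preserved": names_ok / len(ref_paths),
    }
```

Keeping the two ratios separate distinguishes a tool that restores names but dumps everything into one folder from one that loses names entirely. This sketch compares names only; pairing it with a content check would be needed to confirm the files themselves are usable.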

We assign a score to every product we review. The weight of each factor depends on the problem the software claims to solve.

| Metric | What We Measure | Weight |
| --- | --- | --- |
| Recovery Success Rate | • Files restored from NTFS, exFAT, FAT32, and ReFS drives after Shift + Delete, quick format, or partition-table damage. • Ability to keep original names, timestamps, and folder layout. • Handling of bad sectors and SMART-warning disks. | 35% |
| File-Type & File-System Support | • Breadth of signature library (Office docs, RAW photos, 4K/8K video, VM images). • Support for BitLocker volumes (key or recovery-key unlock). • Rebuild quality on Windows Storage Spaces and Intel/AMD RAID. | 20% |
| Scan Speed & Depth | • Time to first result and full-scan duration. • Ability to pause/resume, skip bad blocks, and throttle I/O on failing drives. • Responsiveness when TRIM is active. | 15% |
| Ease of Use | • Driver prompts, UAC requests, and need for “WinPE” boot media. • Clarity of status messages and recovery-destination warnings. • Preview quality (hex, thumbnail, text) before export. | 15% |
| Price & License Limits | • One-PC vs. tech-license cost. • Limits in the free tier (file-size cap, export block). • Extra fees for RAID or forensic modules. | 10% |
| Support & Documentation | • Ticket reply time, availability of live chat or phone. • Depth of Windows-specific guides (BitLocker unlock, ReFS quirks). • Changelog frequency. | 5% |
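The source does not spell out how the weights are combined into a final score, so the following is only a sketch of one plausible reading: a weighted average of per-metric scores. It assumes each metric is first rated on a 0 to 100 scale, and the dictionary keys and the `overall_score` function are hypothetical names chosen for illustration. The weights are the ones listed for Windows above.

```python
# Weights from the Windows table, in percent. Key names are illustrative.
WINDOWS_WEIGHTS = {
    "recovery_success": 35,
    "file_type_support": 20,
    "scan_speed_depth": 15,
    "ease_of_use": 15,
    "price_license": 10,
    "support_docs": 5,
}


def overall_score(metric_scores: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted average of per-metric scores (assumed to be on a 0-100 scale)."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must add up to 100")
    missing = weights.keys() - metric_scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(metric_scores[name] * weight for name, weight in weights.items()) / 100


# Made-up example ratings, not real test results.
sample = {
    "recovery_success": 90,
    "file_type_support": 80,
    "scan_speed_depth": 70,
    "ease_of_use": 60,
    "price_license": 50,
    "support_docs": 40,
}
print(overall_score(sample, WINDOWS_WEIGHTS))  # 74.0
```

Because the weight of each factor depends on the problem the software claims to solve, the weights are passed in as a parameter rather than hard-coded into the function.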

macOS Software Testing Criteria

Mac testing spans similar data loss scenarios, but here we also consider how the software interacts with Intel Macs, T2-equipped Macs, and Apple Silicon (M1-M4) Macs, as well as bootable APFS and HFS+ drives, Time Machine targets, and encrypted volumes. Extra attention goes to how a tool handles APFS snapshots and system volumes.

| Metric | What We Measure | Weight |
| --- | --- | --- |
| Recovery Success Rate | • Files restored from APFS (incl. snapshots and the Signed System Volume), HFS+, and FAT/exFAT externals after file deletion, partition deletion, and erasure scenarios. • Success on Intel, T2, and Apple Silicon (M1-M4) Macs across various Mac and MacBook models, including Target Disk Mode recovery. | 35% |
| File-Type & File-System Support | • Signature coverage for macOS-centric formats (Pages, Numbers, Logic, ProRes, HEIF/HEVC). • Ability to unlock FileVault-encrypted volumes with password or recovery key. • Fusion Drive and Core Storage handling. | 20% |
| Scan Speed & Depth | • Time to first result and full-scan duration. • Stability when System Integrity Protection blocks direct disk access (workarounds via bootable USB or deep driver). • Pause/resume scanning without breaking the recovery process. | 15% |
| Ease of Use | • Need to disable System Integrity Protection, grant Full Disk Access, or boot from external media. • Clear warnings about writing to the source disk on APFS SSDs that use TRIM. • Quality of preview for package files (Photos library, Mail). | 15% |
| Price & License Limits | • Single-Mac vs. cross-platform licenses. • Trial restrictions (preview-only, file-size cap). • Extra charge for iOS-device or RAID modules. | 10% |
| Support & Documentation | • Response time, native-speaker support, availability of live chat. • Up-to-date guides for Monterey → Sequoia, Apple Silicon boot quirks, and T2 Secure Boot settings. • Update cadence when macOS releases change security layers. | 5% |

How We Test iOS Apps

Unlike desktop software, iOS and iPadOS apps are tested across a range of iPhone and iPad models. We create controlled scenarios (deleted photos, wiped messages, cluttered galleries, and so on), then check whether a cleaner or recovery app can reach that data or accomplish the task at hand. Battery drain, privacy prompts, and on-device AI photo classification are timed and recorded. We also track how much space a cleaning routine actually frees on repeated runs.
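Tracking space freed on repeated runs comes down to simple arithmetic on free-space readings. The sketch below assumes the tester notes the device's free space (in MB) before the first cleanup and again after each run; the readings in the example are made up, and the function names are hypothetical.

```python
def reclaimed_per_run(free_space_mb: list[float]) -> list[float]:
    """Space gained by each run.

    free_space_mb[0] is the baseline reading; every later entry is the
    reading taken right after one more cleanup run.
    """
    return [after - before for before, after in zip(free_space_mb, free_space_mb[1:])]


def first_run_share(free_space_mb: list[float]) -> float:
    """Fraction of the total gain that came from the very first run."""
    gains = reclaimed_per_run(free_space_mb)
    total = sum(gains)
    return gains[0] / total if total > 0 else 0.0


# Illustrative readings only: baseline, then after runs 1, 2, and 3.
readings = [12000, 13500, 13650, 13650]
print(reclaimed_per_run(readings))  # [1500, 150, 0]
```

A cleaner that frees most of its space on the first pass and little afterward is behaving as expected. Large gains on every run would suggest the app is counting caches that the system simply rebuilds between runs.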

| Metric | What We Measure | Weight |
| --- | --- | --- |
| Recovery / Cleanup Success | • For recovery apps: percentage of test files (photos, videos, messages, contacts, app data) restored in our lab scenarios. • For cleaners: amount of redundant data removed without false positives. | 30% |
| File- & Scenario Coverage | • Deleted vs. existing data, corrupted backups, iCloud sync issues, Live Photos, HEIF/HEVC, third-party chat attachments. • Ability to work across different iOS/iPadOS versions (iOS 15-18). | 20% |
| Privacy & Security | • On-device processing (no forced cloud upload). • Clear data-handling policy, no intrusive permissions, no jailbreak/root required. | 10% |
| Ease of Use | • Clarity of on-screen prompts, risk warnings, and progress indicators. • Quality of step-by-step guidance for non-technical users. | 10% |
| Performance | • Scan or cleanup speed on various iPhone and iPad models. • Battery drain and thermal impact during long scans. | 10% |
| Pricing Transparency | • Up-front disclosure of one-time vs. subscription fees. • Limitations in the free tier (file-size caps, export blocks, ads). | 10% |
| Support & Updates | • How the developer communicates with consumers. • Frequency of app updates and iOS/iPadOS compatibility patches. | 5% |
| Documentation & Community Feedback | • Quality of in-app help and web knowledge base. • User ratings and verified reviews on the App Store and forums. | 5% |